Localization, that is, the formation of rings of fractions, is perhaps one of the most important technical tools in commutative algebra. In this post we cover the definitions and some simple properties, and we restrict ourselves to ring theory and go no further. Throughout, we let $A$ be a commutative ring. With extra effort some of the results can be extended to non-commutative rings, but we do not do that here.

In fact the construction of $\mathbb{Q}$ from $\mathbb{Z}$ is already an example. For any $a \in \mathbb{Q}$, we have some $m,n \in \mathbb{Z}$ with $n \neq 0$ such that $a = \frac{m}{n}$. As a matter of notation we may also say that an ordered pair $(m,n)$ determines $a$. Two ordered pairs $(m,n)$ and $(m',n')$ are equivalent if and only if

$$mn'=m'n.$$

This construction uses only the ring structure of $\mathbb{Z}$, so it is natural to ask whether the process can be generalized to all rings. It also uses the fact that $\mathbb{Z}$ is an entire ring (that is, an integral domain; the two terms mean the same thing), which not every ring is. Nevertheless, there is a way to generalize it. $\def\mfk{\mathfrak}$

Multiplicatively closed subset

(Definition 1) A multiplicatively closed subset $S \subset A$ is a subset such that $1 \in S$ and $xy \in S$ whenever $x,y \in S$.

For example, for $\mathbb{Z}$ we have the multiplicatively closed subset

$$\mathbb{Z} \setminus \{0\}.$$

We could also include $0$ here, but then the ring of fractions becomes trivial, as we will see. If $S$ is also an ideal, then $1 \in S$ forces $S=A$, which is not very interesting. However, the ideals contained in the complement of $S$ are interesting.

(Proposition 1) Suppose $A$ is a commutative ring such that $1 \neq 0$. Let $S$ be a multiplicatively closed set that does not contain $0$. Let $\mfk{p}$ be a maximal element among the ideals contained in $A \setminus S$. Then $\mfk{p}$ is prime.

Proof. Recall that $\mfk{p}$ is prime if $\mfk{p} \neq A$ and, for any $x,y \in A$ such that $xy \in \mfk{p}$, we have $x \in \mfk{p}$ or $y \in \mfk{p}$. Here $\mfk{p} \neq A$ because $1 \in S$. Now fix $x,y \in A \setminus \mfk{p}$; we show that $xy \notin \mfk{p}$. The ideal $\mfk{q}_1=\mfk{p}+Ax$ is strictly bigger than $\mfk{p}$. Since $\mfk{p}$ is maximal among the ideals contained in $A \setminus S$, we see

$$\mfk{q}_1 \cap S \neq \varnothing.$$

Therefore there exist some $a \in A$ and $p \in \mfk{p}$ such that

$$p+ax \in S.$$

Likewise, $\mfk{q}_2=\mfk{p}+Ay$ meets $S$ (again by the maximality of $\mfk{p}$), so there exist some $a' \in A$ and $p' \in \mfk{p}$ such that

$$p'+a'y \in S.$$

Since $S$ is closed under multiplication, we have

$$(p+ax)(p'+a'y)=pp'+p'ax+pa'y+aa'xy \in S.$$

But since $\mfk{p}$ is an ideal, we see $pp'+p'ax+pa'y \in \mfk{p}$. Therefore we must have $xy \notin \mfk{p}$: if not, then $(p+ax)(p'+a'y) \in \mfk{p}$, which gives $\mfk{p} \cap S \neq \varnothing$, and this is impossible. $\square$

As a corollary, for an ideal $\mfk{p} \subset A$, if $A \setminus \mfk{p}$ is multiplicatively closed, then $\mfk{p}$ is prime. Conversely, if we are given a prime ideal $\mfk{p}$, then we also get a multiplicatively closed subset.

(Proposition 2) If $\mfk{p}$ is a prime ideal of $A$, then $S = A \setminus \mfk{p}$ is multiplicatively closed.

Proof. First $1 \in S$ since $\mfk{p} \neq A$. On the other hand, if $x,y \in S$ we see $xy \in S$ since $\mfk{p}$ is prime. $\square$
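As a sanity check of Proposition 2 and the corollary before it, the following Python sketch enumerates the ideals of the finite ring $\mb{Z}/12\mb{Z}$ and compares "the ideal is prime" with "the complement is multiplicatively closed". The ring $\mb{Z}/12\mb{Z}$ and all function names are choices made for this illustration only; they do not come from the discussion above.

```python
def ideal(d, n):
    """The ideal of Z/nZ generated by the residue class of d."""
    return {(d * k) % n for k in range(n)}

def is_multiplicatively_closed(S, n):
    """Definition 1: 1 lies in S and S is closed under products (mod n)."""
    return 1 % n in S and all((x * y) % n in S for x in S for y in S)

def is_prime_ideal(I, n):
    """I is a proper ideal, and xy in I forces x in I or y in I."""
    if len(I) == n:
        return False
    return all(x in I or y in I
               for x in range(n) for y in range(n) if (x * y) % n in I)

n = 12
ring = set(range(n))
# For each generator d: (is the ideal prime?, is its complement closed?)
report = {d: (is_prime_ideal(ideal(d, n), n),
              is_multiplicatively_closed(ring - ideal(d, n), n))
          for d in (1, 2, 3, 4, 6, 12)}
```

In this small case the two answers agree for every ideal: only the ideals generated by $2$ and $3$ are prime, and only their complements are multiplicatively closed.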

Ring of fractions of a ring

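Let $S$ be a multiplicatively closed subset of $A$. We define an equivalence relation on $A \times S$ as follows: for $(a,s),(b,t) \in A \times S$,

$$(a,s) \sim (b,t) \iff (at-bs)u=0 \text{ for some } u \in S.$$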

(Proposition 3) $\sim$ is an equivalence relation.

Proof. Since $(as-as)1=0$ while $1 \in S$, we see $(a,s) \sim (a,s)$. For being symmetric, note that

$$(at-bs)u=0 \iff (bs-at)u=0.$$

Finally, to show that it is transitive, suppose $(a,s) \sim (b,t)$ and $(b,t) \sim (c,u)$. There exist $v,w \in S$ such that

$$(at-bs)v=0, \quad (bu-ct)w=0.$$

This gives $bsv=atv$ and $buw = ctw$, which implies

$$autvw=bsuvw=cstvw, \quad \text{that is,} \quad (au-cs)tvw=0.$$

But $tvw \in S$ since $t,v,w \in S$ and $S$ is multiplicatively closed. Hence

$$(a,s) \sim (c,u).$$

$\square$
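To see why the extra factor $u \in S$ appears in the relation, it can help to compute a tiny case by brute force. The sketch below takes $A=\mb{Z}/6\mb{Z}$ and $S=\{1,2,4\}$ (the powers of $2$ modulo $6$); both choices, and all names in the code, are assumptions made purely for illustration.

```python
from itertools import product

N = 6            # A = Z/6Z, a ring with zero divisors
S = (1, 2, 4)    # powers of 2 modulo 6, a multiplicatively closed subset

def equivalent(p, q):
    """(a, s) ~ (b, t) iff (a*t - b*s)*u == 0 in A for some u in S."""
    (a, s), (b, t) = p, q
    return any(((a * t - b * s) * u) % N == 0 for u in S)

pairs = list(product(range(N), S))

# Group the pairs into classes by comparing with one representative each;
# this is legitimate because ~ is an equivalence relation (Proposition 3).
classes = []
for p in pairs:
    for cls in classes:
        if equivalent(p, cls[0]):
            cls.append(p)
            break
    else:
        classes.append([p])

# Without the witness u, the pairs (3, 1) and (0, 1) would look different:
# 3*1 - 0*1 = 3 is nonzero in Z/6Z, although 3*2 = 0.
naive_equal = (3 * 1 - 0 * 1) % N == 0
```

Here the $18$ pairs fall into exactly three classes, and $(3,1) \sim (0,1)$ holds only thanks to the witness $u=2$.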

Let $a/s$ denote the equivalence class of $(a,s)$. Let $S^{-1}A$ denote the set of equivalence classes (it is not a good idea to write $A/S$ as it may be confused with the notation for a factor group), and we put a ring structure on $S^{-1}A$ as follows:

$$\frac{a}{s}+\frac{b}{t}=\frac{at+bs}{st}, \qquad \frac{a}{s}\cdot\frac{b}{t}=\frac{ab}{st}.$$

These are the same formulas as in elementary arithmetic. But first of all we need to show that $S^{-1}A$ indeed forms a ring.

(Proposition 4) The addition and multiplication are well defined. Further, $S^{-1}A$ is a commutative ring with identity.

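Proof. Suppose $(a,s) \sim (a',s')$ and $(b,t) \sim (b',t')$. We need to show that

$$\frac{at+bs}{st}=\frac{a't'+b's'}{s't'},$$

or

$$\left[(at+bs)s't'-(a't'+b's')st\right]w=0 \quad \text{for some } w \in S.$$

There exist $u,v \in S$ such that

$$(as'-a's)u=0, \quad (bt'-b't)v=0.$$

If we multiply the first equation by $vtt'$ and the second equation by $uss'$ and add the results, we see

$$\left[(at+bs)s't'-(a't'+b's')st\right]uv=0,$$

which is exactly what we want, since $uv \in S$.

On the other hand, we need to show that

$$\frac{ab}{st}=\frac{a'b'}{s't'}.$$

That is,

$$(abs't'-a'b'st)w=0 \quad \text{for some } w \in S.$$

Again, we have

$$as'u=a'su, \quad bt'v=b'tv.$$

Hence

$$(abs't'-a'b'st)uv=(as'u)(bt'v)-(a'su)(b'tv)=0.$$

Since $uv \in S$, we are done.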

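Next we show that $S^{-1}A$ has a ring structure. If $0 \in S$, then $S^{-1}A$ contains exactly one element $0/1$ since in this case, all pairs are equivalent:

$$(at-bs)0=0.$$

We therefore only discuss the case when $0 \notin S$. First $0/1$ is the zero element with respect to addition since

$$\frac{0}{1}+\frac{a}{s}=\frac{0 \cdot s+a \cdot 1}{1 \cdot s}=\frac{a}{s}.$$

On the other hand, we have the inverse $-a/s$:

$$\frac{a}{s}+\frac{-a}{s}=\frac{as-as}{s^2}=\frac{0}{s^2}=\frac{0}{1}.$$

Associativity and commutativity of addition are verified in the same way. $1/1$ is the unit with respect to multiplication:

$$\frac{1}{1}\cdot\frac{a}{s}=\frac{a}{s}.$$

Multiplication is associative since

$$\left(\frac{a}{s}\cdot\frac{b}{t}\right)\frac{c}{u}=\frac{abc}{stu}=\frac{a}{s}\left(\frac{b}{t}\cdot\frac{c}{u}\right).$$

Multiplication is commutative since

$$\frac{a}{s}\cdot\frac{b}{t}=\frac{ab}{st}=\frac{ba}{ts}=\frac{b}{t}\cdot\frac{a}{s}.$$

Finally distributivity:

$$\frac{a}{s}\left(\frac{b}{t}+\frac{c}{u}\right)=\frac{abu+act}{stu}, \qquad \frac{a}{s}\cdot\frac{b}{t}+\frac{a}{s}\cdot\frac{c}{u}=\frac{absu+acst}{s^2tu}=\frac{(abu+act)s}{(stu)s}.$$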

Note $ab/cb=a/c$ since $(abc-abc)1=0$. $\square$ $\def\mb{\mathbb}$
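The well-definedness part of Proposition 4 can also be checked exhaustively in a small case. The sketch below again uses $A=\mb{Z}/6\mb{Z}$ and $S=\{1,2,4\}$, which are illustrative assumptions rather than anything fixed by the text; `naive_add` is a deliberately wrong formula included only for contrast.

```python
from itertools import product

N = 6
S = (1, 2, 4)    # multiplicatively closed in Z/6Z

def equivalent(p, q):
    """(a, s) ~ (b, t) iff (a*t - b*s)*u == 0 for some u in S."""
    (a, s), (b, t) = p, q
    return any(((a * t - b * s) * u) % N == 0 for u in S)

def add(p, q):
    """a/s + b/t = (a*t + b*s)/(s*t) on representatives."""
    (a, s), (b, t) = p, q
    return ((a * t + b * s) % N, (s * t) % N)

def mul(p, q):
    """(a/s)(b/t) = (a*b)/(s*t) on representatives."""
    (a, s), (b, t) = p, q
    return ((a * b) % N, (s * t) % N)

def naive_add(p, q):
    """A wrong 'addition' that just adds numerators; used as a contrast."""
    (a, s), (b, t) = p, q
    return ((a + b) % N, (s * t) % N)

pairs = list(product(range(N), S))

def well_defined(op):
    """Equivalent inputs must always give equivalent outputs."""
    return all(equivalent(op(p, q), op(p2, q2))
               for p, p2 in product(pairs, repeat=2) if equivalent(p, p2)
               for q, q2 in product(pairs, repeat=2) if equivalent(q, q2))
```

Under these assumptions `add` and `mul` pass the check while `naive_add` fails it, which is one way to see that the cross-multiplied numerator $at+bs$ is not optional.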

Cases and examples

First we consider the case when $A$ is entire. If $0 \in S$, then $S^{-1}A$ is trivial, which is not so interesting. However, provided that $0 \notin S$, we get some well-behaved result:

(Proposition 5) Let $A$ be an entire ring, and let $S$ be a multiplicatively closed subset of $A$ that does not contain $0$. Then the natural map

$$\varphi_S:A \to S^{-1}A, \quad x \mapsto x/1$$

is injective. Therefore it can be considered as a natural inclusion. Further, every element of $\varphi_S(S)$ is invertible.

Proof. Indeed, if $x/1=0/1$, then there exists $s \in S$ such that $xs=0$. Since $A$ is entire and $s \neq 0$, we see $x=0$, hence $\varphi_S$ is injective. For $s \in S$, we see $\varphi_S(s)=s/1$, and $(1/s)\varphi_S(s)=(1/s)(s/1)=s/s=1/1$, so $\varphi_S(s)$ is invertible. $\square$

Note that since $A$ is entire we can also conclude that $S^{-1}A$ is entire. As a word of warning, the ring homomorphism $\varphi_S$ is not injective in general: for example, when $0 \in S$, this map is the zero map.
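The failure of injectivity is easy to observe in finite examples. Since $x/1=0/1$ means $xs=0$ for some $s \in S$, the kernel of $\varphi_S$ can be listed directly. The rings $\mb{Z}/6\mb{Z}$ and $\mb{Z}/7\mb{Z}$ and the sets $S$ below are assumptions chosen for illustration, and `kernel_of_phi` is a hypothetical helper name.

```python
def kernel_of_phi(n, S):
    """Elements x of Z/nZ with x/1 = 0/1, i.e. x*s == 0 for some s in S."""
    return {x for x in range(n) if any((x * s) % n == 0 for s in S)}

# Z/6Z is not entire: 3 is killed by 2, so 3/1 = 0/1.
kernel_z6 = kernel_of_phi(6, (1, 2, 4))

# Z/7Z is a field (in particular entire) and 0 is not in S: the kernel is trivial.
kernel_z7 = kernel_of_phi(7, range(1, 7))

# If 0 lies in S, every element is sent to 0/1.
kernel_zero_in_S = kernel_of_phi(6, (0, 1))
```

The three results are $\{0,3\}$, $\{0\}$ and the whole ring respectively, matching the entire case of Proposition 5 and the warning about $0 \in S$.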

If we go further and let $S$ consist of all non-zero elements of $A$, we have:

(Proposition 6) If $A$ is entire and $S$ contains all non-zero elements of $A$, then $S^{-1}A$ is a field, called the quotient field or the field of fractions.

Proof. First we need to show that $S^{-1}A$ is entire. Suppose $(a/s)(b/t)=ab/st =0/1$ but $a/s \neq 0/1$, so that $a \neq 0$. Then there is some $u \in S$, in particular $u \neq 0$, such that

$$abu=0.$$

Since $A$ is entire, $b$ has to be $0$, which implies $b/t=0/1$. Second, if $a/s \neq 0/1$, we see $a \neq 0$ and therefore is in $S$, hence we’ve found the inverse $(a/s)^{-1}=s/a$. $\square$

In this case we can identify $A$ as a subset of $S^{-1}A$ and write $a/s=s^{-1}a$.

Let $A$ be a commutative ring, and let $S$ be the set of invertible elements of $A$. If $u \in S$, then there exists some $v \in S$ such that $uv=1$. We see $1 \in S$ and if $a,b \in S$, we have $ab \in S$ since $ab$ has an inverse as well. This set is frequently denoted by $A^\ast$, and is called the group of invertible elements of $A$. For example, $\mb{Z}^\ast$ consists of $-1$ and $1$. If $A$ is a field, then $A^\ast$ is the multiplicative group of non-zero elements of $A$. For example $\mb{Q}^\ast$ is the set of all non-zero rational numbers. For $A^\ast$ we have

If $A$ is a field, then $(A^\ast)^{-1}A \simeq A$.

Proof. Define

$$\varphi_S:A \to (A^\ast)^{-1}A, \quad a \mapsto a/1.$$

Then as we have already shown, $\varphi_S$ is injective. Secondly we show that $\varphi_S$ is surjective. For any $a/s \in (A^\ast)^{-1}A$, we see $as^{-1}/1 = a/s$. Therefore $\varphi_S(as^{-1})=a/s$ as is shown. $\square$

Now let's see a concrete example. If $A$ is entire, then the polynomial ring $A[X]$ is entire. If $K = S^{-1}A$ is the quotient field of $A$, we denote the quotient field of $A[X]$ by $K(X)$. Elements of $K(X)$ are naturally called rational functions, and can be written as $f(X)/g(X)$ where $f,g \in A[X]$ and $g \neq 0$. For $b \in K$, we say a rational function $f/g$ is defined at $b$ if $g(b) \neq 0$. Naturally this process can be generalized to polynomials in $n$ variables.

Local ring and localization

We say a commutative ring $A$ is local if it has a unique maximal ideal. Let $\mfk{p}$ be a prime ideal of $A$, and $S = A \setminus \mfk{p}$, then $A_{\mfk{p}}=S^{-1}A$ is called the local ring of $A$ at $\mfk{p}$. Alternatively, we say the process of passing from $A$ to $A_\mfk{p}$ is localization at $\mfk{p}$. You will see it makes sense to call it localization:

(Proposition 7) $A_\mfk{p}$ is local. Precisely, the unique maximal ideal is

$$I=\{a/s : a \in \mfk{p},\ s \in S\}.$$

Note $I$ is indeed equal to $\mfk{p}A_\mfk{p}$.

Proof. First we show that $I$ is an ideal. For $b/t \in A_\mfk{p}$ and $a/s \in I$, we see

$$\frac{b}{t}\cdot\frac{a}{s}=\frac{ba}{ts} \in I$$

since $a \in \mfk{p}$ implies $ba \in \mfk{p}$. Next we show that $I$ is maximal, which is equivalent to showing that $A_\mfk{p}/I$ is a field. For $b/t \notin I$, we have $b \in S$, hence it is legitimate to write $t/b$. This gives

$$\left(\frac{b}{t}+I\right)\left(\frac{t}{b}+I\right)=\frac{1}{1}+I.$$

Hence we have found the inverse.

Finally we show that $I$ is the unique maximal ideal. Let $J$ be another maximal ideal. Suppose $J \neq I$; since $J$ is maximal it is not contained in $I$, so we can pick $m/n \in J \setminus I$. This gives $m \in S$, since if not, $m \in \mfk{p}$ and then $m/n \in I$. But for $n/m \in A_\mfk{p}$ we have

$$\frac{m}{n}\cdot\frac{n}{m}=\frac{1}{1} \in J.$$

This forces $J$ to be $A_\mfk{p}$ itself, contradicting the assumption that $J$ is a maximal ideal. Hence $I$ is unique. $\square$

Example

Let $p$ be a prime number, and take $A=\mb{Z}$ and $\mfk{p}=p\mb{Z}$. We now try to determine what $A_\mfk{p}$ and $\mfk{p}A_\mfk{p}$ look like. First, $S = A \setminus \mfk{p}$ is the set of all integers prime to $p$. Therefore $A_\mfk{p}$ can be considered as the ring of all rational numbers $m/n$ where $n$ is prime to $p$, and $\mfk{p}A_\mfk{p}$ can be considered as the set of all rational numbers $kp/n$ where $k \in \mb{Z}$ and $n$ is prime to $p$.
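This description translates directly into a few lines of Python using the standard `fractions` module, which always stores a rational number in lowest terms. The function names below are hypothetical and chosen only for this sketch; `p` is assumed to be a prime number.

```python
from fractions import Fraction

def in_local_ring(q, p):
    """q lies in Z_(p) iff its reduced denominator is prime to p."""
    return q.denominator % p != 0

def in_maximal_ideal(q, p):
    """q lies in pZ_(p) iff, in addition, p divides its reduced numerator."""
    return in_local_ring(q, p) and q.numerator % p == 0

def is_unit(q, p):
    """q is a unit of Z_(p) iff both q and 1/q lie in Z_(p)."""
    return q != 0 and in_local_ring(q, p) and in_local_ring(1 / q, p)

p = 5
samples = [Fraction(m, n) for m in range(-12, 13) for n in range(1, 13)]
local_samples = [q for q in samples if in_local_ring(q, p)]
```

For every sampled element of $\mb{Z}_{(5)}$, being a unit is the same as lying outside the maximal ideal, which is exactly what "local" means in Proposition 7.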

$\mb{Z}$ is the simplest example of a ring and $p\mb{Z}$ is the simplest example of a prime ideal. And $A_\mfk{p}$ in this case shows what localization does: $A$ is 'expanded' with respect to $\mfk{p}$. Every member of $A_\mfk{p}$ is related to $\mfk{p}$, and the maximal ideal is determined by $\mfk{p}$.

Let $k$ be an infinite field. Let $A=k[x_1,\cdots,x_n]$ where the $x_i$ are independent indeterminates, and let $\mfk{p}$ be a prime ideal in $A$. Then $A_\mfk{p}$ is the ring of all rational functions $f/g$ where $g \notin \mfk{p}$. We have already defined rational functions. But we can go further and demonstrate the prototype of the local rings which arise in algebraic geometry. Let $V$ be the variety defined by $\mfk{p}$, that is,

$$V=\{x \in k^n : f(x)=0 \text{ for all } f \in \mfk{p}\}.$$

Then what about $A_\mfk{p}$? For $f/g \in A_\mfk{p}$ we have $g \notin \mfk{p}$, and therefore $g(x)$ is not equal to $0$ almost everywhere on $V$. That is, $A_\mfk{p}$ can be identified with the ring of all rational functions on $k^n$ which are defined at almost all points of $V$. We call this the local ring of $k^n$ along the variety $V$.

Universal property

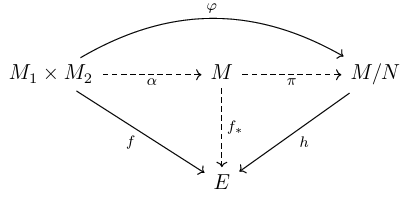

Let $A$ be a ring and $S^{-1}A$ a ring of fractions, then we shall see that $\varphi_S:A \to S^{-1}A$ has a universal property.

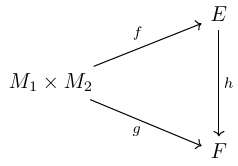

(Proposition 8) Let $g:A \to B$ be a ring homomorphism such that $g(s)$ is invertible in $B$ for all $s \in S$, then there exists a unique homomorphism $h:S^{-1}A \to B$ such that $g = h \circ \varphi_S$.

Proof. For $a/s \in S^{-1}A$, define $h(a/s)=g(a)g(s)^{-1}$. It looks immediate but we shall show that this is what we are looking for and is unique.

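Firstly we need to show that it is well defined. Suppose $a/s=a'/s'$, then there exists some $u \in S$ such that

$$(as'-a's)u=0.$$

Applying $g$ to both sides yields

$$\left(g(a)g(s')-g(a')g(s)\right)g(u)=0.$$

Since $g(s)$ is invertible for all $s \in S$, we therefore get

$$g(a)g(s)^{-1}=g(a')g(s')^{-1}.$$

It is a homomorphism since

$$h\left(\frac{a}{s}\cdot\frac{a'}{s'}\right)=g(aa')g(ss')^{-1}=g(a)g(s)^{-1}g(a')g(s')^{-1}=h\left(\frac{a}{s}\right)h\left(\frac{a'}{s'}\right)$$

and

$$h\left(\frac{a}{s}+\frac{a'}{s'}\right)=g(as'+a's)g(ss')^{-1}, \qquad h\left(\frac{a}{s}\right)+h\left(\frac{a'}{s'}\right)=g(a)g(s)^{-1}+g(a')g(s')^{-1};$$

they are equal since

$$g(as'+a's)g(ss')^{-1}=\left(g(a)g(s')+g(a')g(s)\right)g(s)^{-1}g(s')^{-1}=g(a)g(s)^{-1}+g(a')g(s')^{-1}.$$

Next we show that $g=h \circ \varphi_S$. For $a \in A$, we have

$$h(\varphi_S(a))=h(a/1)=g(a)g(1)^{-1}=g(a).$$

Finally we show that $h$ is unique. Let $h'$ be a homomorphism satisfying the condition, then for $a \in A$ we have

$$h'(a/1)=h'(\varphi_S(a))=g(a).$$

For $s \in S$, we also have

$$h'(1/s)=h'\left((s/1)^{-1}\right)=h'(s/1)^{-1}=g(s)^{-1}.$$

Since $a/s = (a/1)(1/s)$ for all $a/s \in S^{-1}A$, we get

$$h'(a/s)=h'(a/1)h'(1/s)=g(a)g(s)^{-1}=h(a/s).$$

That is, $h'$ (or $h$) is totally determined by $g$. $\square$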
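A concrete instance of Proposition 8 may help. Take $A=\mb{Z}$, $S=\mb{Z} \setminus 7\mb{Z}$ and let $g$ be reduction modulo $7$, so that $g(s)$ is invertible in $\mb{Z}/7\mb{Z}$ for every $s \in S$. The induced map $h$ then sends $a/s$ to $g(a)g(s)^{-1}$. The choice of the prime $7$ and the helper names are assumptions made for this sketch; the modular inverse uses Python's three-argument `pow` with exponent `-1`, available from Python 3.8.

```python
from fractions import Fraction

P = 7

def g(a):
    """Reduction g: Z -> Z/7Z; g(s) is invertible whenever 7 does not divide s."""
    return a % P

def h(a, s):
    """h(a/s) = g(a) * g(s)^(-1), from any representative with 7 not dividing s."""
    return (g(a) * pow(g(s), -1, P)) % P

def h_of(q):
    """h applied to a Fraction whose reduced denominator is prime to 7."""
    return h(q.numerator, q.denominator)
```

For example, `h(3, 4)`, `h(6, 8)` and `h(-9, -12)` all return the same residue, which is the well-definedness shown in the proof, and `h(a, 1)` agrees with `g(a)`, which is the identity $g = h \circ \varphi_S$.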

Let's restate it in the language of category theory (you can skip this part if you are not yet familiar with it). Let $\mfk{C}$ be the category whose objects are ring-homomorphisms

$$f:A \to B$$

such that $f(s)$ is invertible for all $s \in S$. Then by the computation in the proof of Proposition 5, $\varphi_S$ is an object of $\mfk{C}$. For two objects $f:A \to B$ and $f':A \to B'$, a morphism $g \in \operatorname{Mor}(f,f')$ is a homomorphism

$$g:B \to B'$$

such that $f'=g \circ f$. So here comes the question: what is the position of $\varphi_S$ in $\mfk{C}$?

Let $\mfk{A}$ be a category. An object $P$ of $\mfk{A}$ is called universally attracting if there exists a unique morphism of each object of $\mfk{A}$ into $P$, and is called universally repelling if for every object of $\mfk{A}$ there exists a unique morphism of $P$ into this object. Therefore we have the answer for $\mfk{C}$.

(Proposition 9) $\varphi_S$ is a universally repelling object in $\mfk{C}$.

Principal and factorial ring

An ideal $\mfk{o} \subset A$ is said to be principal if there exists some $a \in A$ such that $Aa = \mfk{o}$. For example for $\mb{Z}$, the ideal

$$\{\cdots,-4,-2,0,2,4,\cdots\}$$

is principal and we may write $2\mb{Z}$. If every ideal of a commutative ring $A$ is principal, we say $A$ is principal. Further we say $A$ is a PID if $A$ is also an integral domain (entire). When it comes to rings of fractions, we also have the following proposition:

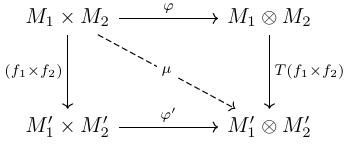

(Proposition 10) Let $A$ be a principal ring and $S$ a multiplicatively closed subset with $0 \notin S$, then $S^{-1}A$ is principal as well.

Proof. Let $I \subset S^{-1}A$ be an ideal. If $a/s \in I$ for some $a \in S$, then we are done, since $(s/a)(a/s) = 1/1 \in I$ implies that $I=S^{-1}A$ itself, which is generated by $1/1$. Hence we shall assume $a \notin S$ for all $a/s \in I$. For $a/s \in I$ we also have $(a/s)(s/1)=a/1 \in I$, therefore $J=\varphi_S^{-1}(I)$ is not empty. $J$ is an ideal of $A$, being the preimage of an ideal under a ring homomorphism; for instance, for $a \in A$ and $b \in J$, we have $\varphi_S(ab) =ab/1=(a/1)(b/1) \in I$ which implies $ab \in J$. Since $A$ is principal, there exists some $a$ such that $Aa = J$. We now compare $S^{-1}A(a/1)$ and $I$. For any $(c/u)(a/1)=ca/u \in S^{-1}A(a/1)$, we have $ca/u \in I$ because $a/1 \in I$, hence $S^{-1}A(a/1)\subset I$. On the other hand, for $c/u \in I$, we see $c/1=(c/u)(u/1) \in I$, hence $c \in J$, and there exists some $b \in A$ such that $c = ba$, which gives $c/u=ba/u=(b/u)(a/1) \in S^{-1}A(a/1)$. Hence $I \subset S^{-1}A(a/1)$, and we have finally proved that $I = S^{-1}A(a/1)$. $\square$

As an immediate corollary, if $A_\mfk{p}$ is the localization of $A$ at $\mfk{p}$, and if $A$ is principal, then $A_\mfk{p}$ is principal as well. Next we go through another kind of ring. A ring is called factorial (or a unique factorization ring or UFD) if it is entire and if every non-zero element has a unique factorization into irreducible elements. An element $a \neq 0$ is called irreducible if it is not a unit and whenever $a=bc$, then either $b$ or $c$ is a unit. For every non-zero element $a$ of a factorial ring, we have

$$a=u\prod_{i=1}^{n}p_i$$

where $u$ is a unit (invertible) and the $p_i$ are irreducible.

In fact, every PID is a UFD (proof here). Irreducible elements in a factorial ring are called prime elements or simply primes (take $\mathbb{Z}$ and prime numbers as an example). Indeed, if $A$ is a factorial ring and $p$ a prime element, then $Ap$ is a prime ideal. But we are more interested in the ring of fractions of a factorial ring.

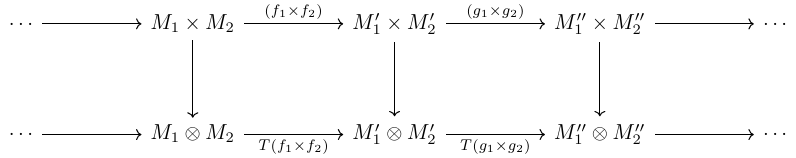

(Proposition 11) Let $A$ be a factorial ring and $S$ a multiplicatively closed subset with $0 \notin S$, then $S^{-1}A$ is factorial.

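Proof. Pick $a/s \in S^{-1}A$. Since $A$ is factorial, we have $a=up_1 \cdots p_k$ where the $p_i$ are primes and $u$ is a unit. But we do not yet know what the irreducible elements of $S^{-1}A$ are. Naturally our first candidates are the $p_i/1$. There is no need to restrict ourselves to the $p_i$; we should work with all primes of $A$. Suppose $p$ is a prime of $A$. If $p \in S$, then $p/1$ is a unit of $S^{-1}A$, not prime. More generally, if $Ap \cap S \neq \varnothing$, then $rp \in S$ for some $r \in A$. But then

$$\frac{p}{1}\cdot\frac{r}{rp}=\frac{rp}{rp}=\frac{1}{1},$$

again $p/1$ is a unit, not prime. Finally if $Ap \cap S = \varnothing$, then $p/1$ is prime in $S^{-1}A$. It is not a unit, since $(p/1)(c/v)=1/1$ would give $pc=v \in Ap \cap S$. For any

$$\frac{a}{s}\cdot\frac{b}{t}=\frac{ab}{st}=\frac{p}{1},$$

we see $ab=stp \notin S$, because $A$ is entire. But this also gives $ab \in Ap$ which is a prime ideal, hence we can assume $a \in Ap$ and write $a=rp$ for some $r \in A$. With this expansion we get

$$\frac{p}{1}=\frac{rp}{s}\cdot\frac{b}{t}=\frac{p}{1}\cdot\frac{rb}{st}, \quad \text{so} \quad \frac{r}{s}\cdot\frac{b}{t}=\frac{1}{1},$$

since $S^{-1}A$ is entire and $p/1 \neq 0/1$. Hence $b/t$ is a unit, $p/1$ is a prime.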

Conversely, suppose $a/s$ is irreducible in $S^{-1}A$. Since $A$ is factorial, we may write $a=u\prod_{i=1}^{n}p_i$. $a$ cannot be an element of $S$ since $a/s$ is not a unit. We write

$$\frac{a}{s}=\frac{u}{1}\cdot\frac{1}{s}\cdot\frac{p_1}{1}\cdots\frac{p_n}{1}.$$

We see there is some $v \in A$ such that $uv=1$ and accordingly $(u/1)(v/1)=uv/1=1/1$, hence $u/1$ is a unit. We claim that there exists a unique $k$ such that $1 \leq k \leq n$ and $Ap_k \cap S = \varnothing$. If no such $k$ exists, then all $p_j/1$ are units, and so is $a/s$. If both $p_{k}$ and $p_{k'}$ satisfy the requirement and $k \neq k'$, then we can write $a/s$ as

$$\frac{a}{s}=\left\{\frac{u}{1}\cdot\frac{1}{s}\prod_{i \neq k'}\frac{p_i}{1}\right\}\frac{p_{k'}}{1}.$$

Neither the factor in curly brackets nor $p_{k'}/1$ is a unit, contradicting the fact that $a/s$ is irreducible. Next we show that $a/s$ equals $p_k/1$ up to a unit. For simplicity we write

$$b=u\prod_{i \neq k}p_i.$$

Note $a/s = bp_k/s = (b/s)(p_k/1)$. Since $a/s$ is irreducible and $p_k/1$ is not a unit, we conclude that $b/s$ is a unit. We are done with the study of irreducible elements of $S^{-1}A$: each is of the form $p/1$ (up to a unit) where $p$ is prime in $A$ and $Ap \cap S = \varnothing$.

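Now we are close to the fact that $S^{-1}A$ is also factorial. For any non-zero $a/s \in S^{-1}A$, we have an expansion

$$\frac{a}{s}=\frac{u}{1}\cdot\frac{1}{s}\cdot\frac{p_1}{1}\cdots\frac{p_n}{1}.$$

Let $p'_1,p'_2,\cdots,p'_j$ be those $p_i$ whose generated prime ideal has nontrivial intersection with $S$, then $p'_1/1, p'_2/1,\cdots,p'_j/1$ are units of $S^{-1}A$. Let $q_1,q_2,\cdots,q_k$ be the other $p_i$'s, then $q_1/1,q_2/1,\cdots,q_k/1$ are irreducible in $S^{-1}A$. This gives

$$\frac{a}{s}=\left\{\frac{u}{1}\cdot\frac{1}{s}\cdot\frac{p'_1}{1}\cdots\frac{p'_j}{1}\right\}\frac{q_1}{1}\cdots\frac{q_k}{1},$$

where the factor in curly brackets is a unit.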

Hence $S^{-1}A$ is factorial as well. $\square$
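For $A=\mb{Z}$ the bookkeeping in this proof is easy to carry out by machine. In the sketch below, $S$ is assumed to be the set of all products of a given finite set of primes (for instance $S=\{2^i3^j\}$), so a prime $p$ satisfies $\mb{Z}p \cap S \neq \varnothing$ exactly when $p$ is one of the inverted primes. The function names and the choice of $S$ are illustrative assumptions.

```python
def prime_factors(n):
    """Prime factorization of n >= 1 by trial division, with multiplicity."""
    factors, d = [], 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)
    return factors

def split_factorization(a, inverted_primes):
    """Split a >= 1 into (unit part, irreducible factors) in S^{-1}Z,
    where S consists of all products of the primes in inverted_primes."""
    unit, irreducibles = 1, []
    for p in prime_factors(a):
        if p in inverted_primes:
            unit *= p                # Zp meets S, so p/1 is a unit
        else:
            irreducibles.append(p)   # Zp misses S, so p/1 stays irreducible
    return unit, irreducibles
```

With $S=\{2^i3^j\}$, the integer $420=2^2 \cdot 3 \cdot 5 \cdot 7$ splits into the unit part $12$ and the irreducible factors $5$ and $7$, mirroring the grouping into $p'_i$ and $q_i$ above.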

We finish the whole post with a comprehensive proposition:

(Proposition 12) Let $A$ be a factorial ring and $p$ a prime element, $\mfk{p}=Ap$. The localization of $A$ at $\mfk{p}$ is principal.

Proof. Here $S = A \setminus \mfk{p}$. For $a/s \in S^{-1}A$, we see $p$ does not divide $s$ since if $s = rp$ for some $r \in A$, then $s \in \mfk{p}$, contradicting the fact that $S = A \setminus \mfk{p}$. Since $A$ is factorial, for $a \neq 0$ we may write $a = cp^n$ for some $n \geq 0$ where $p$ does not divide $c$ either (which gives $c \in S$). Hence $a/s = (c/s)(p^n/1)$. Note $(c/s)(s/c)=1/1$ and therefore $c/s$ is a unit. So every non-zero $a/s \in S^{-1}A$ may be written as

$$a/s=up^n/1$$

where $u$ is a unit of $S^{-1}A$.

Let $I$ be any non-zero ideal in $S^{-1}A$ (the zero ideal is clearly principal), and

$$m=\min\{n \geq 0 : up^n/1 \in I \text{ for some unit } u\}.$$

Fix a unit $u$ with $up^m/1 \in I$. Let's discuss the relation between $S^{-1}A(p^m/1)$ and $I$. First we see $S^{-1}A(p^m/1)=S^{-1}A(up^m/1)$ since if $v$ is the inverse of $u$, we get

$$v(up^m/1)=p^m/1.$$

Any non-zero element of $S^{-1}A(up^m/1)$ is of the form

$$vup^{m+k}/1=v(p^k/1)u(p^m/1)$$

where $v$ is a unit and $k \geq 0$. Since $up^m/1 \in I$, we see $vup^{m+k}/1 \in I$ as well, hence $S^{-1}A(up^m/1) \subset I$. On the other hand, by the minimality of $m$, any non-zero element of $I$ is of the form $wup^{m+n}/1=w(p^n/1)u(p^m/1)$ where $w$ is a unit and $n \geq 0$. This shows that $wup^{m+n}/1 \in S^{-1}A(up^m/1)$. Hence $S^{-1}A(p^m/1)=S^{-1}A(up^m/1)=I$ as we wanted. $\square$
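For $A=\mb{Z}$ and $\mfk{p}=p\mb{Z}$ the exponent $m$ in this proof is simply the smallest $p$-adic valuation among the generators of $I$. The following sketch computes it for a finitely generated ideal of $\mb{Z}_{(p)}$; the function names are hypothetical, and the inputs are assumed to be non-zero fractions whose reduced denominators are prime to $p$.

```python
from fractions import Fraction

def valuation(q, p):
    """The n with q = u * p^n for a unit u of Z_(p).
    Assumes q is non-zero and its reduced denominator is prime to p."""
    n, num = 0, q.numerator
    while num % p == 0:
        num //= p
        n += 1
    return n

def generator_exponent(generators, p):
    """The m such that the ideal generated by the given elements is (p^m)."""
    return min(valuation(q, p) for q in generators)

p = 5
gens = [Fraction(50, 3), Fraction(10, 7)]
m = generator_exponent(gens, p)
# Each generator divided by p^m still lies in Z_(p) ...
quotients = [q / p**m for q in gens]
```

Here $50/3 = (2/3) \cdot 5^2$ and $10/7=(2/7) \cdot 5$, so $m=1$ and the ideal generated by both elements is $5\mb{Z}_{(5)}$.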