The ring of real trigonometric polynomials

The ring

Throughout we consider the polynomial ring

This ring has a lot of non-trivial properties which give us a good chance to study commutative ring theory.

Characters in Analysis and Algebra

Dedekind Domain and Properties in an Elementary Approach

You can find material on Dedekind domains (or Dedekind rings) in almost every book on algebraic number theory, but many of their properties can be proved inside ring theory. I hope you can find the solution you need in this post, which will not go further than elementary ring theory. With that being said, you are assumed to have enough knowledge of rings and rings of fractions (this post serves well), but not too much mathematical maturity is assumed (at the very least you are assumed to be familiar with the terminology in the linked post).$\def\mb{\mathbb}$ $\def\mfk{\mathfrak}$

There are several ways to define a Dedekind domain since there are several equivalent statements of it. We will start from the one based on rings of fractions. As a friendly reminder, $\mb{Z}$ or any principal ideal domain is already a Dedekind domain. In fact a Dedekind domain may be viewed as a generalization of a principal ideal domain.

Let $\mfk{o}$ be an integral domain (a.k.a. entire ring), and $K$ be its quotient field. A Dedekind domain is an integral domain $\mfk{o}$ such that the fractional ideals form a group under multiplication. Let's have a breakdown. By a fractional ideal $\mfk{a}$ we mean a nontrivial additive subgroup of $K$ such that

- $\mfk{o}\mfk{a}=\mfk{a}$,

- there exists some nonzero $c \in \mfk{o}$ such that $c\mfk{a} \subset \mfk{o}$.

What does the group look like? As you may guess, the unit element is $\mfk{o}$. For a fractional ideal $\mfk{a}$, we have the inverse to be another fractional ideal $\mfk{b}$ such that $\mfk{ab}=\mfk{ba}=\mfk{o}$. Note we regard $\mfk{o}$ as a subring of $K$. For $a \in \mfk{o}$, we treat it as $a/1 \in K$. This makes sense because the map $i:a \mapsto a/1$ is injective. For the existence of $c$, you may consider it as a restriction that the ‘denominator’ is bounded. Alternatively, we say that fractional ideal of $K$ is a finitely generated $\mfk{o}$-submodule of $K$. But in this post it is not assumed that you have learned module theory.

Let's take $\mb{Z}$ as an example. The quotient field of $\mb{Z}$ is $\mb{Q}$. Fix a prime $p$. We have a fractional ideal $P$ where all elements are of the type $\frac{np}{2}$ with $n \in \mb{Z}$. Then indeed we have $\mb{Z}P=P$. On the other hand, taking $2 \in \mb{Z}$, we have $2P \subset \mb{Z}$. For its inverse we can take the fractional ideal $Q$ where all elements are of the type $\frac{2n}{p}$ with $n \in \mb{Z}$. As proved in algebraic number theory, the ring of algebraic integers in a number field is a Dedekind domain.
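The example can be checked numerically. The following is only a sketch under the assumption $p=3$ (any fixed prime would do); the helper names `in_P` and `in_Q` are made up for illustration.

```python
from fractions import Fraction

p = 3  # a fixed prime, chosen only for illustration

def in_P(x: Fraction) -> bool:
    """x lies in P = (p/2)Z iff x / (p/2) is an integer."""
    return (x / Fraction(p, 2)).denominator == 1

def in_Q(x: Fraction) -> bool:
    """x lies in Q = (2/p)Z iff x / (2/p) is an integer."""
    return (x / Fraction(2, p)).denominator == 1

# c = 2 clears the denominators of P: 2 * (n p / 2) = n p is an integer.
sample_P = [Fraction(n * p, 2) for n in range(-5, 6)]
cleared = all((2 * x).denominator == 1 for x in sample_P)

# Product of the generators: (p/2) * (2/p) = 1, so PQ contains 1 and hence Z.
gen_product = Fraction(p, 2) * Fraction(2, p)
```

Since every element of $PQ$ is a sum of integer multiples of `gen_product`, this is consistent with $PQ=\mb{Z}$.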

Before we go on we need to clarify the definition of ideal multiplication. Let $\mfk{a}$ and $\mfk{b}$ be two ideals, we define $\mfk{ab}$ to be the set of all sums

$$x_1y_1+x_2y_2+\cdots+x_ny_n$$

where $x_i \in \mfk{a}$ and $y_i \in \mfk{b}$. Here the number $n$ is finite but not fixed. Alternatively we can say $\mfk{ab}$ contains all finite sums of products of elements of $\mfk{a}$ and $\mfk{b}$.

(Proposition 1) A Dedekind domain $\mfk{o}$ is Noetherian.

By Noetherian ring we mean that every ideal in the ring is finitely generated. Precisely, we will prove that for every ideal $\mfk{a} \subset \mfk{o}$ there are $a_1,a_2,\cdots,a_n \in \mfk{a}$ such that, for every $r \in \mfk{a}$, we have an expression

$$r=c_1a_1+c_2a_2+\cdots+c_na_n, \quad c_i \in \mfk{o}.$$

Also note that any ideal $\mfk{a} \subset \mfk{o}$ can be viewed as a fractional ideal.

Proof. Let $\mfk{a}$ be a nonzero ideal of $\mfk{o}$, and let $K$ be the quotient field of $\mfk{o}$. Since $\mfk{oa}=\mfk{a}$, we may also view $\mfk{a}$ as a fractional ideal. Since $\mfk{o}$ is a Dedekind domain, the fractional ideals of $\mfk{o}$ form a group, so there is a fractional ideal $\mfk{b}$ such that $\mfk{ab}=\mfk{ba}=\mfk{o}$. Since $1 \in \mfk{o}$, there exist some $a_1,a_2,\cdots, a_n \in \mfk{a}$ and $b_1,b_2,\cdots,b_n \in \mfk{b}$ such that $\sum_{i = 1 }^{n}a_ib_i=1$. For any $r \in \mfk{a}$, we have an expression

$$r = r\sum_{i=1}^{n}a_ib_i=\sum_{i=1}^{n}(rb_i)a_i,$$

where each $rb_i \in \mfk{ab}=\mfk{o}$.

On the other hand, any element of the form $c_1a_1+c_2a_2+\cdots+c_na_n$, by definition, is an element of $\mfk{a}$. $\blacksquare$

From now on, the inverse of a fractional ideal $\mfk{a}$ will be written as $\mfk{a}^{-1}$.

(Proposition 2) For ideals $\mfk{a},\mfk{b} \subset \mfk{o}$, $\mfk{b}\subset\mfk{a}$ if and only if there exists some $\mfk{c}$ such that $\mfk{ac}=\mfk{b}$ (or we simply say $\mfk{a}|\mfk{b}$)

Proof. If $\mfk{b}=\mfk{ac}$, simply note that $\mfk{ac} \subset \mfk{a} \cap \mfk{c} \subset \mfk{a}$. For the converse, suppose that $\mfk{a} \supset \mfk{b}$, then $\mfk{c}=\mfk{a}^{-1}\mfk{b}$ is an ideal of $\mfk{o}$ since $\mfk{c}=\mfk{a}^{-1}\mfk{b} \subset \mfk{a}^{-1}\mfk{a}=\mfk{o}$, hence we may write $\mfk{b}=\mfk{a}\mfk{c}$. $\blacksquare$

(Proposition 3) If $\mfk{a}$ is an ideal of $\mfk{o}$, then there are prime ideals $\mfk{p}_1,\mfk{p}_2,\cdots,\mfk{p}_n$ such that

$$\mfk{a}=\mfk{p}_1\mfk{p}_2\cdots\mfk{p}_n.$$

Proof. For this problem we use a classical technique: contradiction on maximality. Suppose this is not true, and let $\mfk{U}$ be the set of ideals of $\mfk{o}$ that cannot be written as a product of prime ideals. By assumption $\mfk{U}$ is non-empty. Since, as we have proved, $\mfk{o}$ is Noetherian, we can pick a maximal element $\mfk{a}$ of $\mfk{U}$ with respect to inclusion. If $\mfk{a}$ is a maximal ideal, then since all maximal ideals are prime, $\mfk{a}$ itself is prime, which is impossible for an element of $\mfk{U}$. Otherwise $\mfk{a}$ is properly contained in a maximal ideal $\mfk{m}$, and we write $\mfk{a}=\mfk{m}\mfk{m}^{-1}\mfk{a}$. We have $\mfk{m}^{-1}\mfk{a} \supsetneq \mfk{a}$ since if not, we have $\mfk{a}=\mfk{ma}$, which implies that $\mfk{m}=\mfk{o}$. By the maximality of $\mfk{a}$ in $\mfk{U}$, $\mfk{m}^{-1}\mfk{a}\not\in\mfk{U}$, hence it can be written as a product of prime ideals. But $\mfk{m}$ is prime as well, so we have a prime factorization for $\mfk{a}$, contradicting the definition of $\mfk{U}$.

Next we show uniqueness up to a permutation. If

$$\mfk{p}_1\mfk{p}_2\cdots\mfk{p}_k=\mfk{q}_1\mfk{q}_2\cdots\mfk{q}_j,$$

since $\mfk{p}_1\mfk{p}_2\cdots\mfk{p}_k\subset\mfk{p}_1$ and $\mfk{p}_1$ is prime, we may assume that $\mfk{q}_1 \subset \mfk{p}_1$. By the property of fractional ideal we have $\mfk{q}_1=\mfk{p}_1\mfk{r}_1$ for some fractional ideal $\mfk{r}_1$. However we also have $\mfk{q}_1 \subset \mfk{r}_1$. Since $\mfk{q}_1$ is prime, we either have $\mfk{q}_1 \supset \mfk{p}_1$ or $\mfk{q}_1 \supset \mfk{r}_1$. In the former case we get $\mfk{p}_1=\mfk{q}_1$, and we finish the proof by continuing inductively. In the latter case we have $\mfk{r}_1=\mfk{q}_1=\mfk{p}_1\mfk{q}_1$, which shows that $\mfk{p}_1=\mfk{o}$, which is impossible. $\blacksquare$
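For $\mfk{o}=\mb{Z}$ the statements above reduce to familiar arithmetic, which makes them easy to experiment with. The sketch below assumes ideals of the form $n\mb{Z}$ with $n \geq 2$; the function names are hypothetical and the factorisation uses plain trial division.

```python
def prime_ideal_factorisation(n: int) -> dict:
    """Return {p: e} such that nZ is the product of the (pZ)^e, for n >= 2."""
    if n < 2:
        raise ValueError("expected an integer n >= 2")
    factors = {}
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] = factors.get(p, 0) + 1
            n //= p
        p += 1
    if n > 1:  # whatever is left is itself prime
        factors[n] = factors.get(n, 0) + 1
    return factors

def contains(a: int, b: int) -> bool:
    """aZ contains bZ exactly when a divides b (Proposition 2 in Z)."""
    return b % a == 0

# 360Z = (2Z)^3 (3Z)^2 (5Z)
example = prime_ideal_factorisation(360)
```

Here `contains(6, 12)` holds because $12\mb{Z}=6\mb{Z}\cdot 2\mb{Z}$, which is the 'to contain is to divide' principle of Proposition 2.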

(Proposition 4) Every nontrivial prime ideal $\mfk{p}$ is maximal.

Proof. Let $\mfk{m}$ be a maximal ideal containing $\mfk{p}$. By proposition 2 we have some $\mfk{c}$ such that $\mfk{p}=\mfk{mc}$. If $\mfk{m} \neq \mfk{p}$, then $\mfk{c} \neq \mfk{o}$, and we may write $\mfk{c}=\mfk{p}_1\cdots\mfk{p}_n$, hence $\mfk{p}=\mfk{m}\mfk{p}_1\cdots\mfk{p}_n$, which is a prime factorisation, contradicting the fact that $\mfk{p}$ has a unique prime factorisation, which is $\mfk{p}$ itself. Hence any maximal ideal containing $\mfk{p}$ is $\mfk{p}$ itself. $\blacksquare$

(Proposition 5) Suppose the Dedekind domain $\mfk{o}$ only contains one prime (and maximal) ideal $\mfk{p}$, let $t \in \mfk{p}$ and $t \not\in \mfk{p}^2$, then $\mfk{p}$ is generated by $t$.

Proof. Let $\mfk{t}$ be the ideal generated by $t$. By proposition 3 we have a factorisation

$$\mfk{t}=\mfk{p}^n$$

for some $n$ since $\mfk{o}$ contains only one prime ideal. According to proposition 2, if $n \geq 3$, we write $\mfk{p}^n=\mfk{p}^2\mfk{p}^{n-2}$, and we see $\mfk{p}^2 \supset \mfk{p}^n$. But this is impossible since if so we have $t \in \mfk{p}^n \subset \mfk{p}^2$, contradicting our assumption. Hence $0<n<3$. But if $n=2$ we have $t \in \mfk{p}^2$, which is also not possible. So $\mfk{t}=\mfk{p}$ provided that such $t$ exists.

For the existence of $t$, note if not, then for all $t \in \mfk{p}$ we have $t \in \mfk{p}^2$, hence $\mfk{p} \subset \mfk{p}^2$. On the other hand we already have $\mfk{p}^2 = \mfk{p}\mfk{p}$, which implies that $\mfk{p}^2 \subset \mfk{p}$ (proposition 2), hence $\mfk{p}^2=\mfk{p}$, contradicting proposition 3. Hence such $t$ exists and our proof is finished. $\blacksquare$

In fact there is another equivalent definition of Dedekind domain:

A domain $\mfk{o}$ is Dedekind if and only if

- $\mfk{o}$ is Noetherian.

- $\mfk{o}$ is integrally closed.

- $\mfk{o}$ has Krull dimension $1$ (i.e. every non-zero prime ideal is maximal).

This is equivalent to saying that the fractional ideals form a group, and is frequently used by mathematicians as well. But we need some more advanced techniques to establish the equivalence. Presumably there will be a post about this in the future.

Tensor Product as a Universal Object (Category Theory & Module Theory)

We quite often see direct sums or direct products of groups, modules and vector spaces. Indeed, for modules over a ring $R$, direct products are also products in the category of $R$-modules. On the other hand, the direct sum is a coproduct in the category of $R$-modules.

But what about tensor products? It is some different kind of product but how? Is it related to direct product? How do we write a tensor product down? We need to solve this question but it is not a good idea to dig into numeric works.

From now on, let $R$ be a commutative ring, and $M_1,\cdots,M_n$ are $R$-modules. Mainly we work on $M_1$ and $M_2$, i.e. $M_1 \times M_2$ and $M_1 \otimes M_2$. For $n$-multilinear one, simply replace $M_1\times M_2$ with $M_1 \times M_2 \times \cdots \times M_n$ and $M_1 \otimes M_2$ with $M_1 \otimes \cdots \otimes M_n$. The only difference is the change of symbols.

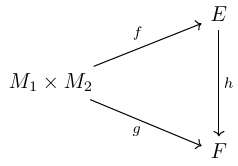

The bilinear maps of $M_1 \times M_2$ determine a category, say $BL(M_1 \times M_2)$ or simply $BL$. For an object $(f,E)$ in this category we have $f: M_1 \times M_2 \to E$ as a bilinear map and $E$ as an $R$-module of course. For two objects $(f,E)$ and $(g,F)$, we define a morphism between them as a linear function making the following diagram commutative: $\def\mor{\operatorname{Mor}}$

This indeed makes $BL$ a category. If we denote the morphisms from $(f,E)$ to $(g,F)$ by $\mor(f,g)$ (for simplicity we omit $E$ and $F$ since they are already determined by $f$ and $g$) we see the composition

$$\mor(f,g) \times \mor(g,h) \to \mor(f,h), \quad (u,v) \mapsto v \circ u$$

satisfy all axioms for a category:

CAT 1 Two sets $\mor(f,g)$ and $\mor(f',g')$ are disjoint unless $f=f'$ and $g=g'$, in which case they are equal. If $g \neq g'$ but $f = f'$ for example, for any $h \in \mor(f,g)$, we have $g = h \circ f = h \circ f' \neq g'$, hence $h \notin \mor(f',g')$. Other cases can be verified in the same fashion.

CAT 2 The existence of identity morphism. For any $(f,E) \in BL$, we simply take the identity map $i:E \to E$. For $h \in \mor(f,g)$, we see $g = h \circ f = h \circ i \circ f$. For $h’ \in \mor(g,f)$, we see $f = h’ \circ g = i \circ h’ \circ g$.

CAT 3 The law of composition is associative when defined.

There we have a category. But what about the tensor product? It is defined to be initial (or universally repelling) object in this category. Let’s denote this object by $(\varphi,M_1 \otimes M_2)$.

For any $(f,E) \in BL$, we have a unique morphism (which is a module homomorphism as well) $h:(\varphi,M_1 \otimes M_2) \to (f,E)$. For $x \in M_1$ and $y \in M_2$, we write $\varphi(x,y)=x \otimes y$. We call the existence of $h$ the universal property of $(\varphi,M_1 \otimes M_2)$.

The tensor product is unique up to isomorphism. That is, if both $(f,E)$ and $(g,F)$ are tensor products, then $E \simeq F$ in the sense of module isomorphism. Indeed, let $h \in \mor(f,g)$ and $h' \in \mor(g,f)$ be the unique morphisms respectively, we see $g = h \circ f$, $f = h' \circ g$, and therefore

$$g = h \circ h' \circ g, \quad f = h' \circ h \circ f.$$

Hence $h \circ h’$ is the identity of $(g,F)$ and $h’ \circ h$ is the identity of $(f,E)$. This gives $E \simeq F$.

What do we get so far? For any module that is connected to $M_1 \times M_2$ by a bilinear map, the tensor product $M_1 \otimes M_2$ of $M_1$ and $M_2$ can always be connected to that module by a unique module homomorphism. What if there is more than one tensor product? Never mind. All tensor products are isomorphic.

But wait, does this definition make sense? Does this product even exist? How can we study the tensor product of two modules if we cannot even write it down? So far we are only working on arrows, and we don't know what is happening inside a module. It is not a good idea to waste our time on 'nonsense'. We can look into it in a natural way. Indeed, if we can find a module satisfying the property we want, then we are done, since this can represent the tensor product under any circumstances. Again, all tensor products of $M_1$ and $M_2$ are isomorphic.

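Let $M$ be the free module generated by the set of all tuples $(x_1,x_2)$ where $x_1 \in M_1$ and $x_2 \in M_2$, and $N$ be the submodule generated by elements of the following types:

$$\begin{aligned}&(x_1+x_1',x_2)-(x_1,x_2)-(x_1',x_2),\\&(x_1,x_2+x_2')-(x_1,x_2)-(x_1,x_2'),\\&(ax_1,x_2)-a(x_1,x_2),\\&(x_1,ax_2)-a(x_1,x_2),\end{aligned}$$

where $x_1,x_1' \in M_1$, $x_2,x_2' \in M_2$ and $a \in R$.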

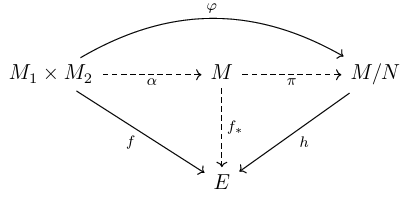

First we have an inclusion map $\alpha:M_1 \times M_2 \to M$ and the canonical map $\pi:M \to M/N$. We claim that $(\pi \circ \alpha, M/N)$ is exactly what we want. But before that, we need to explain why we define such an $N$.

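The reason is quite simple: we want to make sure that $\varphi=\pi \circ \alpha$ is bilinear. For example, we have $\varphi(x_1+x_1',x_2)=\varphi(x_1,x_2)+\varphi(x_1',x_2)$ due to our construction of $N$ (other relations follow in the same manner). This can be verified group-theoretically. Note

$$\varphi(x_1+x_1',x_2)=(x_1+x_1',x_2)+N,$$

but

$$(x_1+x_1',x_2)-(x_1,x_2)-(x_1',x_2) \in N, \quad\text{so}\quad (x_1+x_1',x_2)+N=\big((x_1,x_2)+N\big)+\big((x_1',x_2)+N\big).$$

Hence we get the identity we want. For this reason we can write

$$x_1 \otimes x_2=\varphi(x_1,x_2)=(x_1,x_2)+N.$$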

Sometimes to avoid confusion people may also write $x_1 \otimes_R x_2$ if both $M_1$ and $M_2$ are $R$-modules. But before that we have to verify that this is indeed the tensor product. To verify this, all we need is the universal property of free modules.

By the universal property of $M$, for any $(f,E) \in BL$, we have an induced linear map $f_\ast:M \to E$ making the diagram inside commutative. However, for elements in $N$, we see $f_\ast$ takes value $0$, since $f$ is bilinear. We finish our work by taking $h[(x,y)+N] = f_\ast(x,y)$. This is the map induced by $f_\ast$, following the property of factor modules.

For coprime integers $m,n>1$, we have $\def\mb{\mathbb}$

$$\mb{Z}/m\mb{Z} \otimes \mb{Z}/n\mb{Z}=O$$

where $O$ means that the module only contains $0$ and $\mb{Z}/m\mb{Z}$ is considered as a module over $\mb{Z}$ for $m>1$. This suggests that, the tensor product of two modules is not necessarily ‘bigger’ than its components. Let’s see why this is trivial.

Note that for $x \in \mb{Z}/m\mb{Z}$ and $y \in \mb{Z}/n\mb{Z}$, we have

$$m(x \otimes y)=(mx) \otimes y=0 \quad\text{and}\quad n(x \otimes y)=x \otimes (ny)=0$$

since, for example, $mx = 0$ for $x \in \mb{Z}/m\mb{Z}$ and $\varphi(0,y)=0$. If you have trouble understanding why $\varphi(0,y)=0$, just note that the submodule $N$ in our construction contains elements generated by $(0x,y)-0(x,y)$ already.

By Bézout's identity, for any $x \otimes y$, we see there are $a$ and $b$ such that $am+bn=1$, and therefore

$$x \otimes y=(am+bn)(x \otimes y)=am(x \otimes y)+bn(x \otimes y)=0.$$

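Hence the tensor product is trivial. This example gives us a lot of inspiration. For example, what if $m$ and $n$ are not necessarily coprime, say $\gcd(m,n)=d$? By Bézout's identity still we have

$$d(x \otimes y)=(am+bn)(x \otimes y)=am(x \otimes y)+bn(x \otimes y)=0,$$

where now $a$ and $b$ are chosen such that $am+bn=d$.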

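This inspires us to study the connection between $\mb{Z}/m\mb{Z} \otimes \mb{Z}/n\mb{Z}$ and $\mb{Z}/d\mb{Z}$. By the universal property, for the bilinear map $f:\mb{Z}/m\mb{Z} \times \mb{Z}/n\mb{Z} \to \mb{Z}/d\mb{Z}$ defined by

$$f(a+m\mb{Z},b+n\mb{Z})=ab+d\mb{Z}$$

(there should be no difficulty to verify that $f$ is well-defined), there exists a unique morphism $h:\mb{Z}/m\mb{Z} \otimes \mb{Z}/n\mb{Z} \to \mb{Z}/d\mb{Z}$ such that

$$h\big((a+m\mb{Z}) \otimes (b+n\mb{Z})\big)=ab+d\mb{Z}.$$

Next we show that it has a natural inverse defined by

$$g:\mb{Z}/d\mb{Z} \to \mb{Z}/m\mb{Z} \otimes \mb{Z}/n\mb{Z}, \quad a+d\mb{Z} \mapsto (a+m\mb{Z}) \otimes (1+n\mb{Z}).$$

Taking $a' = a+kd$, we show that $g(a+d\mb{Z})=g(a'+d\mb{Z})$, that is, we need to show that

$$(a+m\mb{Z}) \otimes (1+n\mb{Z})=(a'+m\mb{Z}) \otimes (1+n\mb{Z}).$$

By Bézout's identity, there exist some $r,s$ such that $rm+sn=d$. Hence $a' = a + ksn+krm$, which gives

$$(a'+m\mb{Z}) \otimes (1+n\mb{Z})=(a+ksn+m\mb{Z}) \otimes (1+n\mb{Z})=(a+m\mb{Z}) \otimes (1+n\mb{Z})$$

since

$$(ksn+m\mb{Z}) \otimes (1+n\mb{Z})=(ks+m\mb{Z}) \otimes (n+n\mb{Z})=0.$$

So $g$ is well-defined. Next we show that this is the inverse. Firstly

$$h \circ g(a+d\mb{Z})=h\big((a+m\mb{Z}) \otimes (1+n\mb{Z})\big)=a+d\mb{Z}.$$

Secondly,

$$g \circ h\big((a+m\mb{Z}) \otimes (b+n\mb{Z})\big)=g(ab+d\mb{Z})=(ab+m\mb{Z}) \otimes (1+n\mb{Z})=(a+m\mb{Z}) \otimes (b+n\mb{Z}).$$

Hence $g = h^{-1}$ and we can say

$$\mb{Z}/m\mb{Z} \otimes \mb{Z}/n\mb{Z} \simeq \mb{Z}/\gcd(m,n)\mb{Z}.$$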

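If $m,n$ are coprime, then $\gcd(m,n)=1$, hence $\mb{Z}/m\mb{Z} \otimes \mb{Z}/n\mb{Z} \simeq \mb{Z}/\mb{Z}$ is trivial. More interestingly, $\mb{Z}/m\mb{Z}\otimes \mb{Z}/m\mb{Z} \simeq \mb{Z}/m\mb{Z}$. But this elegant identity raises other questions. First of all, $\gcd(m,n)=\gcd(n,m)$, which implies

$$\mb{Z}/m\mb{Z} \otimes \mb{Z}/n\mb{Z} \simeq \mb{Z}/n\mb{Z} \otimes \mb{Z}/m\mb{Z}.$$

Further, for $m,n,r >1$, we have $\gcd(\gcd(m,n),r)=\gcd(m,\gcd(n,r))=\gcd(m,n,r)$, which gives

$$(\mb{Z}/m\mb{Z} \otimes \mb{Z}/n\mb{Z}) \otimes \mb{Z}/r\mb{Z} \simeq \mb{Z}/\gcd(m,n,r)\mb{Z} \simeq \mb{Z}/m\mb{Z} \otimes (\mb{Z}/n\mb{Z} \otimes \mb{Z}/r\mb{Z}),$$

hence

$$(\mb{Z}/m\mb{Z} \otimes \mb{Z}/n\mb{Z}) \otimes \mb{Z}/r\mb{Z} \simeq \mb{Z}/m\mb{Z} \otimes (\mb{Z}/n\mb{Z} \otimes \mb{Z}/r\mb{Z}).$$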

Hence for modules of the form $\mb{Z}/m\mb{Z}$, we see the tensor product operation is associative and commutative up to isomorphism. Does this hold for all modules? The universal property answers this question affirmatively. From now on we will keep using the universal property. Make sure that you have got the point already.
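The arithmetic behind the cyclic example can be tested by brute force. The sketch below is an illustration only: `bezout` is a standard extended Euclidean algorithm, and `f_is_well_defined` (a hypothetical name) checks that the map $(a+m\mb{Z},b+n\mb{Z}) \mapsto ab+d\mb{Z}$ with $d=\gcd(m,n)$ does not depend on the chosen representatives.

```python
from math import gcd

def bezout(m: int, n: int):
    """Extended Euclid: return (d, r, s) with r*m + s*n == d == gcd(m, n)."""
    old_r, r = m, n
    old_a, a = 1, 0
    old_b, b = 0, 1
    while r != 0:
        q = old_r // r
        old_r, r = r, old_r - q * r
        old_a, a = a, old_a - q * a
        old_b, b = b, old_b - q * b
    return old_r, old_a, old_b

def f_is_well_defined(m: int, n: int) -> bool:
    """Shift representatives by multiples of m and n and compare ab mod d."""
    d = gcd(m, n)
    return all(
        (a * b) % d == ((a + i * m) * (b + j * n)) % d
        for a in range(m) for b in range(n)
        for i in range(-1, 2) for j in range(-1, 2)
    )

d, r, s = bezout(12, 18)  # r*12 + s*18 == 6
```

The identity `r*m + s*n == d` is exactly what forces $d(x \otimes y)=0$ for every pure tensor, so the tensor product cannot be larger than $\mb{Z}/d\mb{Z}$.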

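Let $M_1,M_2,M_3$ be $R$-modules, then there exists a unique isomorphism

$$(M_1 \otimes M_2) \otimes M_3 \to M_1 \otimes (M_2 \otimes M_3), \quad (x \otimes y) \otimes z \mapsto x \otimes (y \otimes z)$$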

for $x \in M_1$, $y \in M_2$, $z \in M_3$.

Proof. Consider the map

where $x \in M_1$. Since $(\cdot\otimes\cdot)$ is bilinear, we see $\lambda_x$ is bilinear for all $x \in M_1$. Hence by the universal property there exists a unique map of the tensor product:

Next we have the map

which is bilinear as well. Again by the universal property we have a unique map

This is indeed the isomorphism we want. The reverse is obtained by reversing the process. For the bilinear map

we get a unique map

Then from the bilinear map

we get the unique map, which is actually the reverse of $\overline{\mu}_x$:

Hence the two tensor products are isomorphic. $\square$

Let $M_1$ and $M_2$ be $R$-modules, then there exists a unique isomorphism

$$M_1 \otimes M_2 \to M_2 \otimes M_1, \quad x_1 \otimes x_2 \mapsto x_2 \otimes x_1$$

where $x_1 \in M_1$ and $x_2 \in M_2$.

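Proof. The map

$$\lambda:M_1 \times M_2 \to M_2 \otimes M_1, \quad (x,y) \mapsto y \otimes x$$

is bilinear and gives us a unique map

$$\overline{\lambda}:M_1 \otimes M_2 \to M_2 \otimes M_1$$

given by $x \otimes y \mapsto y \otimes x$. Symmetrically, the map $\lambda':M_2 \times M_1 \to M_1 \otimes M_2$ gives us a unique map

$$\overline{\lambda'}:M_2 \otimes M_1 \to M_1 \otimes M_2$$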

which is the inverse of $\overline{\lambda}$. $\square$

Therefore, we may view the set of all $R$-modules as a commutative semigroup with the binary operation $\otimes$.

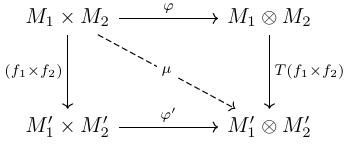

Consider the commutative diagram:

where the $f_i:M_i \to M_i'$ are module homomorphisms. What do we want here? On the left-hand side, we see $f_1 \times f_2$ sends $(x_1,x_2)$ to $(f_1(x_1),f_2(x_2))$, which is quite natural. The question is, is there a natural map sending $x_1 \otimes x_2$ to $f_1(x_1) \otimes f_2(x_2)$? This is what we want from the right-hand side. We know $T(f_1 \times f_2)$ exists, since we have a bilinear map $\mu = \varphi' \circ (f_1\times f_2)$. So for $(x_1,x_2) \in M_1 \times M_2$, we have $T(f_1 \times f_2)(x_1 \otimes x_2) = \varphi' \circ (f_1 \times f_2)(x_1,x_2) = f_1(x_1) \otimes f_2(x_2)$, which is what we want.
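In the special case of free modules of finite rank with chosen bases (an assumption made only for this sketch), the map sending $x_1 \otimes x_2$ to $f_1(x_1) \otimes f_2(x_2)$ is represented by the Kronecker product of the matrices of $f_1$ and $f_2$. The following pure-Python check uses small integer matrices picked arbitrarily; all helper names are hypothetical.

```python
def matmul(A, B):
    """Matrix product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def apply(A, x):
    """Apply the matrix A to the coordinate vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def tensor(x, y):
    """Coordinates of x (x) y in the basis e_i (x) e_j, in lexicographic order."""
    return [a * b for a in x for b in y]

def kron(A, B):
    """Kronecker product: matrix of f1 (x) f2 in the same ordered basis."""
    return [[a * b for a in ra for b in rb] for ra in A for rb in B]

F1, G1 = [[1, 2], [3, 4]], [[0, 1], [1, 1]]
F2, G2 = [[2, 0], [1, 3]], [[1, 1], [0, 2]]
x, y = [1, -2], [3, 5]

# (f1 (x) f2)(x (x) y) == f1(x) (x) f2(y)
lhs = apply(kron(F1, F2), tensor(x, y))
rhs = tensor(apply(F1, x), apply(F2, y))

# Compatibility with composition: (g1 f1) (x) (g2 f2) == (g1 (x) g2)(f1 (x) f2)
functorial = kron(matmul(G1, F1), matmul(G2, F2)) == matmul(kron(G1, G2), kron(F1, F2))
```

The last line is the matrix form of the compatibility of $T$ with composition, and `kron` of two identity matrices is again an identity matrix.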

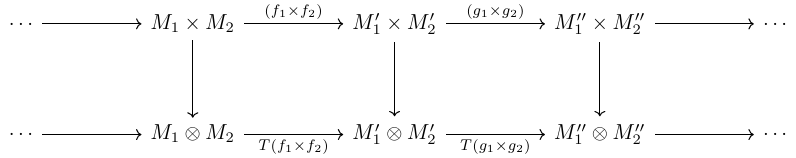

But $T$ in this diagram has more interesting properties. First of all, if $M_1 = M_1'$ and $M_2 = M_2'$, and both $f_1$ and $f_2$ are identity maps, then we see $T(f_1 \times f_2)$ is the identity as well. Next, consider the following chain

We can make it a double chain:

It is obvious that $(g_1 \circ f_1 \times g_2 \circ f_2)=(g_1 \times g_2) \circ (f_1 \times f_2)$, which also gives

$$T(g_1 \circ f_1 \times g_2 \circ f_2)=T(g_1 \times g_2) \circ T(f_1 \times f_2).$$

Hence we can say $T$ is functorial. Sometimes for simplicity we also write $T(f_1,f_2)$ or simply $f_1 \otimes f_2$, as it sends $x_1 \otimes x_2$ to $f_1(x_1) \otimes f_2(x_2)$. Indeed it can be viewed as a map

Why Does a Vector Space Have a Basis (Module Theory)

First we recall some background. Suppose $A$ is a ring with multiplicative identity $1_A$. A left module of $A$ is an additive abelian group $(M,+)$, together with an operation $A \times M \to M$ such that

$$(a+b)x=ax+bx, \quad a(x+y)=ax+ay, \quad a(bx)=(ab)x, \quad 1_Ax=x$$

for $x,y \in M$ and $a,b \in A$. As a corollary, we see $(0_A+0_A)x=0_Ax=0_Ax+0_Ax$, which shows $0_Ax=0_M$ for all $x \in M$. On the other hand, $a(x-x)=0_M$ which implies $a(-x)=-(ax)$. We can also define right $A$-modules but we are not discussing them here.

Let $S$ be a subset of $M$. We say $S$ is a basis of $M$ if $S$ generates $M$ and $S$ is linearly independent. That is, for all $m \in M$, we can pick $s_1,\cdots,s_n \in S$ and $a_1,\cdots,a_n \in A$ such that

$$m=a_1s_1+a_2s_2+\cdots+a_ns_n,$$

and, for any distinct $s_1,\cdots,s_n \in S$, we have

$$a_1s_1+a_2s_2+\cdots+a_ns_n=0_M \implies a_1=a_2=\cdots=a_n=0_A.$$

Note this also shows that $0_M\notin S$ (what happens if $0_M \in S$?). We say $M$ is free if it has a basis. The case when $M$ or $A$ is trivial is excluded.

If $A$ is a field, then $M$ is called a vector space, which is no different from the one we learn in linear algebra and functional analysis. Mathematicians in functional analysis may be interested in the cardinality of a basis of a vector space, for example, whether a vector space is of finite dimension, or whether the basis is countable. But the basis does not come from nowhere. In fact we can prove that every vector space has a basis, but modules are not so lucky. $\def\mb{\mathbb}$

First of all let's consider the cyclic group $\mb{Z}/n\mb{Z}$ for $n \geq 2$. If we define

$$\mb{Z} \times \mb{Z}/n\mb{Z} \to \mb{Z}/n\mb{Z}, \quad (m,k+n\mb{Z}) \mapsto mk+n\mb{Z},$$

which is actually $m$ copies of an element, then we get a module, which will be denoted by $M$. For any $x=k+n\mb{Z} \in M$, we see $nx=nk+n\mb{Z}=0_M$. Therefore for any subset $S \subset M$, if $x_1,\cdots,x_k \in S$, we have

$$nx_1+nx_2+\cdots+nx_k=0_M,$$

which gives the fact that $M$ has no basis. In fact this can be generalized further. If $A$ is a ring but not a field, let $I$ be a nontrivial proper ideal, then $A/I$ is a module that has no basis.
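A brute-force check of the cyclic example may help. The sketch below (with the hypothetical helper `singleton_is_independent`) tests whether a single element of $\mb{Z}/n\mb{Z}$ can be linearly independent over $\mb{Z}$; it only tries the coefficients $1,\dots,n$, which is enough to exhibit a dependence.

```python
def singleton_is_independent(x: int, n: int) -> bool:
    """In the Z-module Z/nZ, {x} is independent iff a*x == 0 forces a == 0.

    Only the coefficients 1..n are tried; finding one with a*x == 0 already
    shows that {x} is linearly dependent.
    """
    return all((a * x) % n != 0 for a in range(1, n + 1))

# No element of Z/6Z forms an independent set, so there is no basis.
no_basis = not any(singleton_is_independent(x, 6) for x in range(6))
```

The coefficient $a=n$ always kills $x$, which is the relation $nx=0_M$ used in the text.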

Following $\mb{Z}/n\mb{Z}$ we also have another example on finite order. Indeed, any finite abelian group is not free as a module over $\mb{Z}$. More generally,

Let $G$ be an abelian group, and $G_{tor}$ be its torsion subgroup. If $G_{tor}$ is non-trivial, then $G$ cannot be a free module over $\mb{Z}$.

Next we shall take a look at infinite rings. Let $F[X]$ be the polynomial ring over a field $F$ and $F'[X]$ be the subring of polynomials whose coefficient of $X$ is equal to $0$. Then $F[X]$ is an $F'[X]$-module. However it is not free.

Suppose we have a basis $S$ of $F[X]$, then we claim that $|S|>1$. If $|S|=1$, say $P \in S$, then $P$ cannot generate $F[X]$: if $P$ is constant then we cannot generate a polynomial containing $X$ with power $1$; if $P$ is not constant, then the constant polynomials cannot be generated. Hence $S$ contains at least two polynomials, say $P_1 \neq 0$ and $P_2 \neq 0$. However, note $-X^2P_1 \in F'[X]$ and $X^2P_2 \in F'[X]$, which gives

$$(X^2P_2)P_1+(-X^2P_1)P_2=0.$$

Hence $S$ cannot be a basis.
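The dependence relation can be verified on concrete polynomials. In this sketch polynomials are lists of coefficients in increasing degree, and $P_1=1+X$, $P_2=2+5X^2$ are arbitrary choices made only for illustration.

```python
def poly_mul(p, q):
    """Product of two polynomials given as coefficient lists (lowest degree first)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def poly_add(p, q):
    """Sum of two polynomials, padding the shorter one with zeros."""
    n = max(len(p), len(q))
    return [(p[i] if i < len(p) else 0) + (q[i] if i < len(q) else 0)
            for i in range(n)]

def in_subring(p):
    """p lies in F'[X] iff its coefficient of X is zero."""
    return len(p) < 2 or p[1] == 0

X2 = [0, 0, 1]        # X^2
P1 = [1, 1]           # 1 + X      (hypothetical element of S)
P2 = [2, 0, 5]        # 2 + 5X^2   (hypothetical element of S)

c1 = poly_mul(X2, P2)                 # coefficient X^2 P2 attached to P1
c2 = [-a for a in poly_mul(X2, P1)]   # coefficient -X^2 P1 attached to P2
relation = poly_add(poly_mul(c1, P1), poly_mul(c2, P2))
```

Both coefficients lie in $F'[X]$ and are nonzero, while `relation` is the zero polynomial, so $P_1$ and $P_2$ are linearly dependent over $F'[X]$.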

I hope those examples have convinced you that basis is not a universal thing. We are going to prove that every vector space has a basis. More precisely,

Let $V$ be a nontrivial vector space over a field $K$. Let $\Gamma$ be a set of generators of $V$ over $K$ and let $S \subset \Gamma$ be a subset which is linearly independent, then there exists a basis $B$ of $V$ such that $S \subset B \subset \Gamma$.

Note we can always find such $\Gamma$ and $S$. In the extreme case, we can pick $\Gamma=V$ and let $S$ be a set containing any single non-zero element of $V$. Note this also shows that we can obtain a basis by expanding any linearly independent set. The proof relies on the fact that every non-zero element in a field is invertible, and also on Zorn's lemma. In fact, the axiom of choice is equivalent to the statement that every vector space has a basis. The converse can be found here. $\def\mfk{\mathfrak}$

Proof. Define

$$\mfk{T}=\{T \subset \Gamma: S \subset T,\ T \text{ is linearly independent}\}.$$

Then $\mfk{T}$ is not empty since it contains $S$. If $T_1 \subset T_2 \subset \cdots$ is a totally ordered chain in $\mfk{T}$, then $T=\bigcup_{i=1}^{\infty}T_i$ is again linearly independent and contains $S$. To show that $T$ is linearly independent, note that if $x_1,x_2,\cdots,x_n \in T$, we can find some $k_1,\cdots,k_n$ such that $x_i \in T_{k_i}$ for $i=1,2,\cdots,n$. If we pick $k = \max(k_1,\cdots,k_n)$, then

$$x_1,x_2,\cdots,x_n \in T_k.$$

But we already know that $T_k$ is linearly independent, so $a_1x_1+\cdots+a_nx_n=0_V$ implies $a_1=\cdots=a_n=0_K$.

By Zorn's lemma, let $B$ be a maximal element of $\mfk{T}$; then $B$ is linearly independent since it is an element of $\mfk{T}$. Next we show that $B$ generates $V$. Suppose not; then, since $\Gamma$ generates $V$, we can pick some $x \in \Gamma$ that is not generated by $B$. Define $B'=B \cup \{x\}$; we see $B'$ is linearly independent as well, because if we pick $y_1,y_2,\cdots,y_n \in B$, and if

$$a_1y_1+a_2y_2+\cdots+a_ny_n+bx=0_V,$$

then if $b \neq 0$ we have

$$x=-b^{-1}(a_1y_1+a_2y_2+\cdots+a_ny_n),$$

contradicting the assumption that $x$ is not generated by $B$. Hence $b=0_K$, and then $a_1=\cdots=a_n=0_K$ because $B$ is linearly independent. However, we have now proved that $B'$ is a linearly independent set strictly containing $B$ and contained in $\Gamma$, contradicting the maximality of $B$ in $\mfk{T}$. Hence $B$ generates $V$. $\square$
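When $\Gamma$ is finite, Zorn's lemma is not needed and the argument becomes a greedy loop: keep adding elements of $\Gamma$ that are not generated by the current set. The sketch below works over $\mb{Q}$ using exact fractions; `rank` and `extend_to_basis` are hypothetical helper names, and this finite version is only an analogue of the proof, not the proof itself.

```python
from fractions import Fraction

def rank(vectors):
    """Rank over Q of a list of vectors, via Gaussian elimination."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    ncols = len(rows[0]) if rows else 0
    for c in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def extend_to_basis(S, Gamma):
    """Greedily enlarge the independent set S inside the generating set Gamma."""
    B = list(S)
    for x in Gamma:
        if rank(B + [x]) > len(B):  # x is not generated by B
            B.append(x)
    return B

S = [(1, 1, 0)]
Gamma = [(1, 1, 0), (2, 2, 0), (0, 1, 0), (1, 0, 0), (0, 0, 1)]
B = extend_to_basis(S, Gamma)
```

The result satisfies $S \subset B \subset \Gamma$, and `rank(B)` equals both `len(B)` and `rank(Gamma)`, so `B` is independent and generates the same space as `Gamma`.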

Rings of Fractions and Localisation

Localisation is perhaps one of the most important technical tools in commutative algebra. In this post we cover definitions and simple properties. Also we restrict ourselves to ring theory and go no further than that. Throughout, we let $A$ be a commutative ring. With extra effort some results can also be extended to non-commutative rings, but we are not doing that here.

In fact the construction of $\mathbb{Q}$ from $\mathbb{Z}$ has already been an example. For any $a \in \mathbb{Q}$, we have some $m,n \in \mathbb{Z}$ with $n \neq 0$ such that $a = \frac{m}{n}$. As a matter of notation we may also say an ordered pair $(m,n)$ determines $a$. Two ordered pairs $(m,n)$ and $(m',n')$ are equivalent if and only if

$$mn'=m'n.$$

But we are only using the ring structure of $\mathbb{Z}$. So it is natural to think whether it is possible to generalize this process to all rings. But we are also using the fact that $\mathbb{Z}$ is an entire ring (or alternatively integral domain, they mean the same thing). However there is a way to generalize it. $\def\mfk{\mathfrak}$

(Definition 1) A multiplicatively closed subset $S \subset A$ is a set that $1 \in S$ and if $x,y \in S$, then $xy \in S$.

For example, for $\mathbb{Z}$ we have a multiplicatively closed subset

We can also insert $0$ here but it may produce some bad result. If $S$ is also an ideal then we must have $S=A$ so this is not very interesting. However the complement is interesting.

(Proposition 1) Suppose $A$ is a commutative ring such that $1 \neq 0$. Let $S$ be a multiplicatively closed set that does not contain $0$. Let $\mfk{p}$ be the maximal element of ideals contained in $A \setminus S$, then $\mfk{p}$ is prime.

Proof. Recall that $\mfk{p}$ is prime if for any $x,y \in A$ such that $xy \in \mfk{p}$, we have $x \in \mfk{p}$ or $y \in \mfk{p}$. We prove the contrapositive, so we fix $x,y \in \mfk{p}^c$. Note we have a strictly bigger ideal $\mfk{q}_1=\mfk{p}+Ax$. Since $\mfk{p}$ is maximal among the ideals contained in $A \setminus S$, we see

$$\mfk{q}_1 \cap S \neq \varnothing.$$

Therefore there exist some $a \in A$ and $p \in \mfk{p}$ such that

$$p+ax \in S.$$

Also, $\mfk{q}_2=\mfk{p}+Ay$ has nontrivial intersection with $S$ (due to the maximality of $\mfk{p}$), so there exist some $a' \in A$ and $p' \in \mfk{p}$ such that

$$p'+a'y \in S.$$

Since $S$ is closed under multiplication, we have

$$(p+ax)(p'+a'y)=pp'+p'ax+pa'y+aa'xy \in S.$$

But since $\mfk{p}$ is an ideal, we see $pp’+p’ax+pa’y \in \mfk{p}$. Therefore we must have $xy \notin \mfk{p}$ since if not, $(p+ax)(p’+a’y) \in \mfk{p}$, which gives $\mfk{p} \cap S \neq \varnothing$, and this is impossible. $\square$

As a corollary, for an ideal $\mfk{p} \subset A$, if $A \setminus \mfk{p}$ is multiplicatively closed, then $\mfk{p}$ is prime. Conversely, if we are given a prime ideal $\mfk{p}$, then we also get a multiplicatively closed subset.

(Proposition 2) If $\mfk{p}$ is a prime ideal of $A$, then $S = A \setminus \mfk{p}$ is multiplicatively closed.

Proof. First $1 \in S$ since $\mfk{p} \neq A$. On the other hand, if $x,y \in S$ we see $xy \in S$ since $\mfk{p}$ is prime. $\square$
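For $A=\mb{Z}$ this can be probed numerically. The sketch below tests closure of $\mb{Z} \setminus n\mb{Z}$ under multiplication on a finite range only, so it is evidence rather than a proof; the function name and the bound are arbitrary choices.

```python
def complement_is_multiplicatively_closed(n: int, bound: int = 30) -> bool:
    """Test on 1..bound whether Z minus nZ is closed under multiplication."""
    S = [x for x in range(1, bound + 1) if x % n != 0]
    return all((x * y) % n != 0 for x in S for y in S)

prime_case = complement_is_multiplicatively_closed(5)      # 5Z is prime
composite_case = complement_is_multiplicatively_closed(6)  # 2 * 3 lies in 6Z
```

For $n=6$ the test fails because $2,3 \notin 6\mb{Z}$ but $2 \cdot 3 \in 6\mb{Z}$, matching the fact that $6\mb{Z}$ is not prime.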

We define an equivalence relation on $A \times S$ as follows:

$$(a,s) \sim (b,t) \iff (at-bs)u=0 \text{ for some } u \in S.$$

(Proposition 3) $\sim$ is an equivalence relation.

Proof. Since $(as-as)1=0$ while $1 \in S$, we see $(a,s) \sim (a,s)$. For being symmetric, note that

$$(at-bs)u=0 \iff (bs-at)u=0.$$

Finally, to show that it is transitive, suppose $(a,s) \sim (b,t)$ and $(b,t) \sim (c,u)$. There exist $v,w \in S$ such that

$$(at-bs)v=0, \quad (bu-ct)w=0.$$

This gives $bsv=atv$ and $buw = ctw$, which implies

$$(au-cs)tvw=0.$$

But $tvw \in S$ since $t,v,w \in S$ and $S$ is multiplicatively closed. Hence

$$(a,s) \sim (c,u).$$

$\square$
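The extra factor $u$ in the relation matters as soon as $A$ has zero divisors. The sketch below takes $A=\mb{Z}/6\mb{Z}$ and $S=\{1,2,4\}$ (choices made only for illustration) and compares the relation with the naive one $at=bs$ by brute force.

```python
N = 6
S = (1, 2, 4)  # multiplicatively closed in Z/6Z: 2*2 = 4, 2*4 = 2, 4*4 = 4
pairs = [(a, s) for a in range(N) for s in S]

def related(x, y):
    """(a, s) ~ (b, t) iff (a t - b s) u == 0 in Z/6Z for some u in S."""
    (a, s), (b, t) = x, y
    return any(((a * t - b * s) * u) % N == 0 for u in S)

def naive_related(x, y):
    """The relation from the integral-domain case: a t == b s, with no factor u."""
    (a, s), (b, t) = x, y
    return (a * t - b * s) % N == 0

def is_transitive(rel):
    return all(rel(x, z)
               for x in pairs for y in pairs if rel(x, y)
               for z in pairs if rel(y, z))

def count_classes(rel):
    """Number of equivalence classes (meaningful only if rel is an equivalence)."""
    reps = []
    for x in pairs:
        if not any(rel(x, r) for r in reps):
            reps.append(x)
    return len(reps)
```

With these choices the naive relation fails to be transitive: $(3,1)$ and $(0,2)$ are related, $(0,2)$ and $(0,1)$ are related, but $(3,1)$ and $(0,1)$ are not. The relation with $u$ is transitive and yields three classes.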

Let $a/s$ denote the equivalence class of $(a,s)$. Let $S^{-1}A$ denote the set of equivalence classes (it is not a good idea to write $A/S$ as it may coincide with the notation of factor group), and we put a ring structure on $S^{-1}A$ as follows:

$$\frac{a}{s}+\frac{b}{t}=\frac{at+bs}{st}, \quad \frac{a}{s} \cdot \frac{b}{t}=\frac{ab}{st}.$$

There is no difference between this one and the one in elementary algebra. But first of all we need to show that $S^{-1}A$ indeed forms a ring.

(Proposition 4) The addition and multiplication are well defined. Further, $S^{-1}A$ is a commutative ring with identity.

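Proof. Suppose $(a,s) \sim (a',s')$ and $(b,t) \sim (b',t')$; we need to show that

$$\frac{a}{s}+\frac{b}{t}=\frac{a'}{s'}+\frac{b'}{t'}$$

or

$$\frac{at+bs}{st}=\frac{a't'+b's'}{s't'}.$$

There exist $u,v \in S$ such that

$$(as'-a's)u=0, \quad (bt'-b't)v=0.$$

If we multiply the first equation by $vtt'$ and the second equation by $uss'$, and add them, we see

$$\left[(at+bs)s't'-(a't'+b's')st\right]uv=0,$$

which is exactly what we want.

On the other hand, we need to show that

$$\frac{a}{s} \cdot \frac{b}{t}=\frac{a'}{s'} \cdot \frac{b'}{t'}.$$

That is,

$$\frac{ab}{st}=\frac{a'b'}{s't'}.$$

Again, we have

$$as'u=a'su, \quad bt'v=b'tv.$$

Hence

$$(abs't'-a'b'st)uv=0.$$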

Since $uv \in S$, we are done.

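Next we show that $S^{-1}A$ has a ring structure. If $0 \in S$, then $S^{-1}A$ contains exactly one element $0/1$ since in this case, all pairs are equivalent:

$$(at-bs)0=0.$$

We therefore only discuss the case when $0 \notin S$. First $0/1$ is the zero element with respect to addition since

$$\frac{0}{1}+\frac{a}{s}=\frac{0 \cdot s+a \cdot 1}{1 \cdot s}=\frac{a}{s}.$$

On the other hand, we have the inverse $-a/s$:

$$\frac{a}{s}+\frac{-a}{s}=\frac{as-as}{s^2}=\frac{0}{s^2}=\frac{0}{1}.$$

$1/1$ is the unit with respect to multiplication:

$$\frac{1}{1} \cdot \frac{a}{s}=\frac{a}{s}.$$

Multiplication is associative since

$$\left(\frac{a}{s} \cdot \frac{b}{t}\right)\frac{c}{u}=\frac{abc}{stu}=\frac{a}{s}\left(\frac{b}{t} \cdot \frac{c}{u}\right).$$

Multiplication is commutative since

$$\frac{a}{s} \cdot \frac{b}{t}=\frac{ab}{st}=\frac{ba}{ts}=\frac{b}{t} \cdot \frac{a}{s}.$$

Finally distributivity.

$$\frac{a}{s}\left(\frac{b}{t}+\frac{c}{u}\right)=\frac{abu+act}{stu}=\frac{abu}{stu}+\frac{act}{stu}=\frac{ab}{st}+\frac{ac}{su}.$$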

Note $ab/cb=a/c$ since $(abc-abc)1=0$. $\square$ $\def\mb{\mathbb}$
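Well-definedness can also be confirmed by exhaustive search in a small case. The sketch below again assumes $A=\mb{Z}/6\mb{Z}$ and $S=\{1,2,4\}$, and checks that replacing both arguments by equivalent pairs always gives an equivalent result; the helper names are hypothetical.

```python
N = 6
S = (1, 2, 4)  # multiplicatively closed subset of Z/6Z
pairs = [(a, s) for a in range(N) for s in S]

def related(x, y):
    """(a, s) ~ (b, t) iff (a t - b s) u == 0 in Z/6Z for some u in S."""
    (a, s), (b, t) = x, y
    return any(((a * t - b * s) * u) % N == 0 for u in S)

def add(x, y):
    """a/s + b/t = (a t + b s)/(s t), computed on representatives."""
    (a, s), (b, t) = x, y
    return ((a * t + b * s) % N, (s * t) % N)

def mul(x, y):
    """(a/s)(b/t) = (a b)/(s t), computed on representatives."""
    (a, s), (b, t) = x, y
    return ((a * b) % N, (s * t) % N)

def well_defined(op):
    """Equivalent inputs must give equivalent outputs."""
    return all(related(op(x, y), op(x2, y2))
               for x in pairs for x2 in pairs if related(x, x2)
               for y in pairs for y2 in pairs if related(y, y2))
```

Both `well_defined(add)` and `well_defined(mul)` hold here, as Proposition 4 predicts.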

First we consider the case when $A$ is entire. If $0 \in S$, then $S^{-1}A$ is trivial, which is not so interesting. However, provided that $0 \notin S$, we get some well-behaved result:

(Proposition 5) Let $A$ be an entire ring, and let $S$ be a multiplicatively closed subset of $A$ that does not contain $0$, then the natural map

$$\varphi_S:A \to S^{-1}A, \quad x \mapsto x/1$$

is injective. Therefore it can be considered as a natural inclusion. Further, every element of $\varphi_S(S)$ is invertible.

Proof. Indeed, if $x/1=0/1$, then there exists $s \in S$ such that $xs=0$. Since $A$ is entire and $s \neq 0$, we see $x=0$, hence $\varphi_S$ is injective. For $s \in S$, we see $\varphi_S(s)=s/1$, and $(1/s)\varphi_S(s)=(1/s)(s/1)=s/s=1$. $\square$

Note since $A$ is entire we can also conclude that $S^{-1}A$ is entire. As a word of warning, the ring homomorphism $\varphi_S$ is not injective in general since, for example, when $0 \in S$, this map is the zero map.

If we go further, letting $S$ consist of all non-zero elements, we have:

(Proposition 6) If $A$ is entire and $S$ is the set of all non-zero elements of $A$, then $S^{-1}A$ is a field, called the quotient field or the field of fractions.

Proof. First we need to show that $S^{-1}A$ is entire. Suppose $(a/s)(b/t)=ab/st =0/1$ but $a/s \neq 0/1$, we see however

$$abu=0 \quad \text{for some } u \in S.$$

Since $A$ is entire, $b$ has to be $0$, which implies $b/t=0/1$. Second, if $a/s \neq 0/1$, we see $a \neq 0$ and therefore is in $S$, hence we’ve found the inverse $(a/s)^{-1}=s/a$. $\square$

In this case we can identify $A$ as a subset of $S^{-1}A$ and write $a/s=s^{-1}a$.

Let $A$ be a commutative ring, and let $S$ be the set of invertible elements of $A$. If $u \in S$, then there exists some $v \in S$ such that $uv=1$. We see $1 \in S$ and if $a,b \in S$, we have $ab \in S$ since $ab$ has an inverse as well. This set is frequently denoted by $A^\ast$, and is called the group of invertible elements of $A$. For example for $\mb{Z}$ we see $\mb{Z}^\ast$ consists of $-1$ and $1$. If $A$ is a field, then $A^\ast$ is the multiplicative group of non-zero elements of $A$. For example $\mb{Q}^\ast$ is the set of all rational numbers without $0$. For $A^\ast$ we have

If $A$ is a field, then $(A^\ast)^{-1}A \simeq A$.

Proof. Define

$$\varphi_S:A \to (A^\ast)^{-1}A, \quad a \mapsto a/1.$$

Then as we have already shown, $\varphi_S$ is injective. Secondly we show that $\varphi_S$ is surjective. For any $a/s \in (A^\ast)^{-1}A$, we see $as^{-1}/1 = a/s$. Therefore $\varphi_S(as^{-1})=a/s$ as is shown. $\square$
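As a small sanity check, take the finite field $A=\mb{Z}/7\mb{Z}$ (an assumption made only for this sketch). Every fraction $a/s$ with $s \neq 0$ should equal $\varphi_S(as^{-1})$. Since $A$ is a field, two fractions $a/s$ and $b/t$ are equal exactly when $at=bs$.

```python
P = 7  # we work in the field Z/7Z

def phi(a: int):
    """The natural map a -> a/1, with fractions stored as (numerator, denominator)."""
    return (a % P, 1)

def to_field(a: int, s: int) -> int:
    """Send the fraction a/s to the field element a * s^(-1)."""
    return (a * pow(s, -1, P)) % P

def equal_fractions(x, y) -> bool:
    """a/s == b/t iff a t == b s (units can be cancelled in a field)."""
    (a, s), (b, t) = x, y
    return (a * t - b * s) % P == 0

surjective = all(equal_fractions(phi(to_field(a, s)), (a, s))
                 for a in range(P) for s in range(1, P))
injective = len({to_field(*phi(a)) for a in range(P)}) == P
```

Here `pow(s, -1, P)` computes the modular inverse (available in Python 3.8 and later).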

Now let's see a concrete example. If $A$ is entire, then the polynomial ring $A[X]$ is entire. If $K = S^{-1}A$ is the quotient field of $A$, we can denote the quotient field of $A[X]$ as $K(X)$. Elements in $K(X)$ are naturally called rational functions, and can be written as $f(X)/g(X)$ where $f,g \in A[X]$ and $g \neq 0$. For $b \in K$, we say a rational function $f/g$ is defined at $b$ if $g(b) \neq 0$. Naturally this process can be generalized to polynomials of $n$ variables.

We say a commutative ring $A$ is local if it has a unique maximal ideal. Let $\mfk{p}$ be a prime ideal of $A$, and $S = A \setminus \mfk{p}$, then $A_{\mfk{p}}=S^{-1}A$ is called the local ring of $A$ at $\mfk{p}$. Alternatively, we say the process of passing from $A$ to $A_\mfk{p}$ is localization at $\mfk{p}$. You will see it makes sense to call it localization:

(Proposition 7) $A_\mfk{p}$ is local. Precisely, the unique maximal ideal is

$$
I=\left\{\frac{a}{s}: a \in \mfk{p},\ s \in S\right\}.
$$

Note $I$ is indeed equal to $\mfk{p}A_\mfk{p}$.

Proof. First we show that $I$ is an ideal. For $b/t \in A_\mfk{p}$ and $a/s \in I$, we see

$$
\frac{b}{t}\cdot\frac{a}{s}=\frac{ba}{ts} \in I
$$

since $a \in \mfk{p}$ implies $ba \in \mfk{p}$. Next we show that $I$ is maximal, which is equivalent to showing that $A_\mfk{p}/I$ is a field. For $b/t \notin I$, we have $b \in S$, hence it is legit to write $t/b$. This gives

$$
\left(\frac{b}{t}+I\right)\left(\frac{t}{b}+I\right)=\frac{1}{1}+I.
$$

Hence we have found the inverse.

Finally we show that $I$ is the unique maximal ideal. Let $J$ be another maximal ideal. Suppose $J \neq I$, then we can pick $m/n \in J \setminus I$. This gives $m \in S$, since otherwise $m \in \mfk{p}$ and then $m/n \in I$. But for $n/m \in A_\mfk{p}$ we have

$$
\frac{m}{n}\cdot\frac{n}{m}=\frac{1}{1} \in J.
$$

This forces $J$ to be $A_\mfk{p}$ itself, contradicting the assumption that $J$ is a maximal ideal. Hence $I$ is unique. $\square$

Let $p$ be a prime number, and take $A=\mb{Z}$ and $\mfk{p}=p\mb{Z}$. We now try to determine what $A_\mfk{p}$ and $\mfk{p}A_\mfk{p}$ look like. First $S = A \setminus \mfk{p}$ is the set of all integers prime to $p$. Therefore $A_\mfk{p}$ can be considered as the ring of all rational numbers $m/n$ where $n$ is prime to $p$, and $\mfk{p}A_\mfk{p}$ can be considered as the set of all rational numbers $kp/n$ where $k \in \mb{Z}$ and $n$ is prime to $p$.

$\mb{Z}$ is the simplest example of a ring and $p\mb{Z}$ is the simplest example of a prime ideal. And $A_\mfk{p}$ in this case shows what localization does: $A$ is 'expanded' with respect to $\mfk{p}$. Every member of $A_\mfk{p}$ is related to $\mfk{p}$, and the maximal ideal is determined by $\mfk{p}$.
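To make the description of $\mb{Z}_{(p)}$ concrete, here is a minimal Python sketch. The function names are made up for this illustration, and elements are modelled with the standard `fractions.Fraction`, which always stores a rational number in lowest terms; this is only an assumption-laden toy model, not a general localization routine.

```python
from fractions import Fraction

def in_localization(q: Fraction, p: int) -> bool:
    """True if q = m/n (lowest terms) has n prime to p, i.e. q lies in Z_(p)."""
    return q.denominator % p != 0

def in_maximal_ideal(q: Fraction, p: int) -> bool:
    """True if q lies in pZ_(p): denominator prime to p, numerator divisible by p."""
    return in_localization(q, p) and q.numerator % p == 0

def is_unit(q: Fraction, p: int) -> bool:
    """Units of Z_(p) are exactly the elements outside the maximal ideal."""
    return in_localization(q, p) and not in_maximal_ideal(q, p)

p = 5  # hypothetical choice of prime
print(in_localization(Fraction(3, 4), p))    # denominator 4 is prime to 5
print(in_localization(Fraction(1, 10), p))   # denominator 10 is divisible by 5
print(in_maximal_ideal(Fraction(15, 7), p))  # numerator 15 = 3 * 5
print(is_unit(Fraction(3, 4), p))            # 3/4 lies outside the maximal ideal
```

The last check reflects Proposition 7: an element of $A_\mfk{p}$ is invertible precisely when it lies outside $\mfk{p}A_\mfk{p}$.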

Let $k$ be an infinite field. Let $A=k[x_1,\cdots,x_n]$ where $x_i$ are independent indeterminates, $\mfk{p}$ a prime ideal in $A$. Then $A_\mfk{p}$ is the ring of all rational functions $f/g$ where $g \notin \mfk{p}$. We have already defined rational functions. But we can go further and demonstrate the prototype of the local rings which arise in algebraic geometry. Let $V$ be the variety defined by $\mfk{p}$, that is,

$$
V=\{x \in k^n: f(x)=0 \text{ for all } f \in \mfk{p}\}.
$$

Then what about $A_\mfk{p}$? For $f/g \in A_\mfk{p}$ we have $g \notin \mfk{p}$, therefore $g(x)$ is not equal to $0$ almost everywhere on $V$. That is, $A_\mfk{p}$ can be identified with the ring of all rational functions on $k^n$ which are defined at almost all points of $V$. We call this the local ring of $k^n$ along the variety $V$.

Let $A$ be a ring and $S^{-1}A$ a ring of fractions, then we shall see that $\varphi_S:A \to S^{-1}A$ has a universal property.

(Proposition 8) Let $g:A \to B$ be a ring homomorphism such that $g(s)$ is invertible in $B$ for all $s \in S$, then there exists a unique homomorphism $h:S^{-1}A \to B$ such that $g = h \circ \varphi_S$.

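Proof. For $a/s \in S^{-1}A$, define $h(a/s)=g(a)g(s)^{-1}$. It looks immediate but we shall show that this is what we are looking for and is unique.

Firstly we need to show that it is well defined. Suppose $a/s=a'/s'$, then there exists some $u \in S$ such that

$$
u(as'-a's)=0.
$$

Applying $g$ on both sides yields

$$
g(u)\left(g(a)g(s')-g(a')g(s)\right)=0.
$$

Since $g(s)$ is invertible for all $s \in S$, we therefore get

$$
g(a)g(s)^{-1}=g(a')g(s')^{-1}.
$$

It is a homomorphism since

$$
h\left(\frac{a}{s}\cdot\frac{a'}{s'}\right)=g(aa')g(ss')^{-1}=g(a)g(s)^{-1}g(a')g(s')^{-1}=h\left(\frac{a}{s}\right)h\left(\frac{a'}{s'}\right)
$$

and

$$
h\left(\frac{a}{s}+\frac{a'}{s'}\right)=g(as'+a's)g(ss')^{-1},\qquad h\left(\frac{a}{s}\right)+h\left(\frac{a'}{s'}\right)=g(a)g(s)^{-1}+g(a')g(s')^{-1};
$$

they are equal since

$$
g(as'+a's)g(ss')^{-1}=\left(g(a)g(s')+g(a')g(s)\right)g(s)^{-1}g(s')^{-1}=g(a)g(s)^{-1}+g(a')g(s')^{-1}.
$$

Next we show that $g=h \circ \varphi_S$. For $a \in A$, we have

$$
h(\varphi_S(a))=h(a/1)=g(a)g(1)^{-1}=g(a).
$$

Finally we show that $h$ is unique. Let $h'$ be a homomorphism satisfying the condition, then for $a \in A$ we have

$$
h'(a/1)=h'(\varphi_S(a))=g(a).
$$

For $s \in S$, we also have

$$
h'(1/s)=h'\left((s/1)^{-1}\right)=h'(s/1)^{-1}=g(s)^{-1}.
$$

Since $a/s = (a/1)(1/s)$ for all $a/s \in S^{-1}A$, we get

$$
h'(a/s)=h'(a/1)h'(1/s)=g(a)g(s)^{-1}=h(a/s).
$$

That is, $h'$ (or $h$) is totally determined by $g$. $\square$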
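As a sanity check of the formula $h(a/s)=g(a)g(s)^{-1}$, here is a small Python sketch in one hypothetical instance: $A=\mb{Z}$, $S$ the integers not divisible by $7$, $B=\mb{Z}/7\mb{Z}$ and $g$ the reduction modulo $7$. The names `P`, `g` and `h` are chosen for this illustration only; the modular inverse uses the built-in three-argument `pow` (Python 3.8 or later).

```python
P = 7  # assumption for this example: B = Z/7Z and S = integers not divisible by 7

def g(a: int) -> int:
    """The ring homomorphism Z -> Z/7Z (reduction mod 7)."""
    return a % P

def h(a: int, s: int) -> int:
    """h(a/s) = g(a) * g(s)^{-1}; requires s in S so that g(s) is invertible."""
    if s % P == 0:
        raise ValueError("s must lie in S (not divisible by 7)")
    return (g(a) * pow(g(s), -1, P)) % P

# Well defined: 1/2 and 3/6 are the same fraction and get the same image.
print(h(1, 2), h(3, 6))
# Compatible with g: h(a/1) = g(a).
print(h(10, 1), g(10))
```

The two fractions $1/2$ and $3/6$ have the same image, as the well-definedness part of the proof predicts, and $h(a/1)=g(a)$ is the statement $g=h\circ\varphi_S$.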

Let's restate it in the language of category theory (you can skip it if you have no idea what it is now). Let $\mfk{C}$ be the category whose objects are ring-homomorphisms

$$
f:A \to B
$$

such that $f(s)$ is invertible for all $s \in S$. Then according to proposition 5, $\varphi_S$ is an object of $\mfk{C}$. For two objects $f:A \to B$ and $f':A \to B'$, a morphism $g \in \operatorname{Mor}(f,f')$ is a homomorphism

$$
g:B \to B'
$$

such that $f'=g \circ f$. So here comes the question: what is the position of $\varphi_S$?

Let $\mfk{A}$ be a category. An object $P$ of $\mfk{A}$ is called universally attracting if there exists a unique morphism of each object of $\mfk{A}$ into $P$, and is called universally repelling if for every object of $\mfk{A}$ there exists a unique morphism of $P$ into this object. Therefore we have the answer for $\mfk{C}$.

(Proposition 9) $\varphi_S$ is a universally repelling object in $\mfk{C}$.

An ideal $\mfk{o} \subset A$ is said to be principal if there exists some $a \in A$ such that $Aa = \mfk{o}$. For example for $\mb{Z}$, the ideal

$$
\{\cdots,-4,-2,0,2,4,\cdots\}
$$

is principal and we may write $2\mb{Z}$. If every ideal of a commutative ring $A$ is principal, we say $A$ is principal. Further we say $A$ is a PID if $A$ is also an integral domain (entire). When it comes to ring of fractions, we also have the following proposition:

(Proposition 10) Let $A$ be a principal ring and $S$ a multiplicatively closed subset with $0 \notin S$, then $S^{-1}A$ is principal as well.

Proof. Let $I \subset S^{-1}A$ be an ideal. If $a \in S$ where $a/s \in I$, then we are done since then $(s/a)(a/s) = 1/1 \in I$, which implies $I=S^{-1}A$ itself, hence we shall assume $a \notin S$ for all $a/s \in I$. But for $a/s \in I$ we also have $(a/s)(s/1)=a/1 \in I$. Therefore $J=\varphi_S^{-1}(I)$ is not empty. $J$ is an ideal of $A$ since for $a \in A$ and $b \in J$, we have $\varphi_S(ab) =ab/1=(a/1)(b/1) \in I$ which implies $ab \in J$. But since $A$ is principal, there exists some $a$ such that $Aa = J$. We shall discuss the relation between $S^{-1}A(a/1)$ and $I$. For any $(c/u)(a/1)=ca/u \in S^{-1}A(a/1)$, clearly we have $ca/u \in I$, hence $S^{-1}A(a/1)\subset I$. On the other hand, for $c/u \in I$, we see $c/1=(c/u)(u/1) \in I$, hence $c \in J$, and there exists some $b \in A$ such that $c = ba$, which gives $c/u=ba/u=(b/u)(a/1) \in I$. Hence $I \subset S^{-1}A(a/1)$, and we have finally proved that $I = S^{-1}A(a/1)$. $\square$

As an immediate corollary, if $A_\mfk{p}$ is the localization of $A$ at $\mfk{p}$, and if $A$ is principal, then $A_\mfk{p}$ is principal as well. Next we go through another kind of rings. A ring is called factorial (or a unique factorization ring or UFD) if it is entire and if every non-zero element has a unique factorization into irreducible elements. An element $a \neq 0$ is called irreducible if it is not a unit and whenever $a=bc$, then either $b$ or $c$ is a unit. For all non-zero elements $a$ in a factorial ring, we have

$$
a=up_1p_2\cdots p_n
$$

where $u$ is a unit (invertible) and each $p_i$ is irreducible.

In fact, every PID is a UFD (proof here). Irreducible elements in a factorial ring are called prime elements or simply primes (take $\mathbb{Z}$ and prime numbers as an example). Indeed, if $A$ is a factorial ring and $p$ a prime element, then $Ap$ is a prime ideal. But we are more interested in the ring of fractions of a factorial ring.

(Proposition 11) Let $A$ be a factorial ring and $S$ a multiplicatively closed subset with $0 \notin S$, then $S^{-1}A$ is factorial.

Proof. Pick $a/s \in S^{-1}A$. Since $A$ is factorial, we have $a=up_1 \cdots p_k$ where $p_i$ are primes and $u$ is a unit. But we do not yet know what the irreducible elements of $S^{-1}A$ are. Naturally our first attack is $p_i/1$. And we have no need to restrict ourselves to $p_i$; we should work on all primes of $A$. Suppose $p$ is a prime of $A$. If $p \in S$, then $p/1$ is a unit of $S^{-1}A$, not prime. If $Ap \cap S \neq \varnothing$, then $rp \in S$ for some $r \in A$. But then

$$
\frac{p}{1}\cdot\frac{r}{rp}=\frac{rp}{rp}=\frac{1}{1},
$$

so again $p/1$ is a unit, not prime. Finally if $Ap \cap S = \varnothing$, then $p/1$ is prime in $S^{-1}A$. For any

$$
\frac{a}{s}\cdot\frac{b}{t}=\frac{ab}{st}=\frac{p}{1},
$$

we see $ab=stp \not\in S$. But this also gives $ab \in Ap$ which is a prime ideal, hence we can assume $a \in Ap$ and write $a=rp$ for some $r \in A$. With this expansion we get

$$
\frac{rp}{s}\cdot\frac{b}{t}=\frac{p}{1} \implies \frac{r}{s}\cdot\frac{b}{t}=\frac{1}{1}.
$$

Hence $b/t$ is a unit, $p/1$ is a prime.

Conversely, suppose $a/s$ is irreducible in $S^{-1}A$. Since $A$ is factorial, we may write $a=u\prod_{i=1}^{n}p_i$. $a$ cannot be an element of $S$ since $a/s$ is not a unit. We write

$$
\frac{a}{s}=\frac{u}{1}\cdot\frac{1}{s}\cdot\frac{p_1}{1}\cdots\frac{p_n}{1}.
$$

We see there is some $v \in A$ such that $uv=1$ and accordingly $(u/1)(v/1)=uv/1=1/1$, hence $u/1$ is a unit. We claim that there exists a unique $k$ such that $1 \leq k \leq n$ and $Ap_k \cap S = \varnothing$. If no such $k$ exists, then all $p_j/1$ are units, and so is $a/s$, which is absurd. If both $p_{k}$ and $p_{k'}$ satisfy the requirement and $k \neq k'$, then we can write $a/s$ as

$$
\frac{a}{s}=\left\{\frac{u}{s}\prod_{i \neq k'}\frac{p_i}{1}\right\}\frac{p_{k'}}{1}.
$$

Neither the one in curly bracket nor $p_{k'}/1$ is a unit, contradicting the fact that $a/s$ is irreducible. Next we show that $a/s=p_k/1$ up to a unit. For simplicity we write

$$
b=u\prod_{i \neq k}p_i.
$$

Note $a/s = bp_k/s = (b/s)(p_k/1)$. Since $a/s$ is irreducible and $p_k/1$ is not a unit, we conclude that $b/s$ is a unit. We are done with the study of irreducible elements of $S^{-1}A$: each is of the form $p/1$ (up to a unit) where $p$ is prime in $A$ and $Ap \cap S = \varnothing$.

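Now we are close to the fact that $S^{-1}A$ is also factorial. For any non-zero $a/s \in S^{-1}A$, we have an expansion

$$
\frac{a}{s}=\frac{u}{s}\cdot\frac{p_1}{1}\cdots\frac{p_n}{1}.
$$

Let $p'_1,p'_2,\cdots,p'_j$ be those whose generated prime ideal has non-empty intersection with $S$, then $p'_1/1, p'_2/1,\cdots,p'_j/1$ are units of $S^{-1}A$. Let $q_1,q_2,\cdots,q_k$ be the other $p_i$'s, then $q_1/1,q_2/1,\cdots,q_k/1$ are irreducible in $S^{-1}A$. This gives

$$
\frac{a}{s}=\left\{\frac{u}{s}\cdot\frac{p'_1}{1}\cdots\frac{p'_j}{1}\right\}\frac{q_1}{1}\cdots\frac{q_k}{1},
$$

where the factor in curly brackets is a unit. Hence $S^{-1}A$ is factorial as well. $\square$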
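The sorting of primes used in this proof can be seen in a toy case. The sketch below assumes $A=\mb{Z}$ and $S=\{1,2,4,8,\cdots\}$, so that $S^{-1}A=\mb{Z}[1/2]$; the only prime $p$ with $Ap \cap S \neq \varnothing$ is then $p=2$. The helper names are hypothetical and the factorization is plain trial division, good enough only for small inputs.

```python
def prime_factors(n):
    """Prime factors of an integer n >= 1, with multiplicity, by trial division."""
    factors, d = [], 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)
    return factors

def split_factorization(a):
    """Split the primes of a > 0 into those that become units in Z[1/2]
    (the prime 2) and those that stay irreducible (the odd primes)."""
    primes = prime_factors(a)
    become_units = [p for p in primes if p == 2]
    stay_irreducible = [p for p in primes if p != 2]
    return become_units, stay_irreducible

# 360 = 2^3 * 3^2 * 5: in Z[1/2] the factor 2^3 is absorbed into the unit.
print(split_factorization(360))  # ([2, 2, 2], [3, 3, 5])
```

So in $\mb{Z}[1/2]$ the element $360/1$ factors as a unit times $(3/1)(3/1)(5/1)$, matching the last display of the proof.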

We finish the whole post by a comprehensive proposition:

(Proposition 12) Let $A$ be a factorial ring and $p$ a prime element, $\mfk{p}=Ap$. The localization of $A$ at $\mfk{p}$ is principal.

Proof. For $a/s \in S^{-1}A$, we see $p$ does not divide $s$ since if $s = rp$ for some $r \in A$, then $s \in \mfk{p}$, contradicting the fact that $S = A \setminus \mfk{p}$. Since $A$ is factorial, for $a \neq 0$ we may write $a = cp^n$ for some $n \geq 0$ where $p$ does not divide $c$ either (which gives $c \in S$). Hence $a/s = (c/s)(p^n/1)$. Note $(c/s)(s/c)=1/1$ and therefore $c/s$ is a unit. Every non-zero $a/s \in S^{-1}A$ may therefore be written as

$$
\frac{a}{s}=u\frac{p^n}{1}
$$

where $u$ is a unit of $S^{-1}A$.

Let $I$ be any non-zero ideal in $S^{-1}A$, and

$$
m=\min\left\{n \geq 0: up^n/1 \in I \text{ for some unit } u\right\}.
$$

Let's discuss the relation between $S^{-1}A(p^m/1)$ and $I$. First we see $S^{-1}A(p^m/1)=S^{-1}A(up^m/1)$ since if $v$ is the inverse of $u$, we get

$$
v \cdot \frac{up^m}{1}=\frac{p^m}{1}.
$$

Any non-zero element of $S^{-1}A(up^m/1)$ is of the form

$$
\frac{vp^k}{1}\cdot\frac{up^m}{1}=\frac{vup^{m+k}}{1}
$$

where $v$ is now an arbitrary unit and $k \geq 0$. Since $up^m/1 \in I$, we see $vup^{m+k}/1 \in I$ as well, hence $S^{-1}A(up^m/1) \subset I$. On the other hand, by the minimality of $m$, any non-zero element of $I$ is of the form $wup^{m+n}/1=w(p^n/1)u(p^m/1)$ where $w$ is a unit and $n \geq 0$. This shows that $wup^{m+n}/1 \in S^{-1}A(up^m/1)$. Hence $S^{-1}A(p^m/1)=S^{-1}A(up^m/1)=I$ as we wanted. $\square$
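The decomposition $a/s=up^n/1$ is easy to compute when $A=\mb{Z}$. The following Python sketch works under that assumption only; `decompose` and `generator_exponent` are names invented for this example, and an ideal is represented by a finite list of generators.

```python
from fractions import Fraction

def decompose(q: Fraction, p: int):
    """Write a non-zero q in Z_(p) as u * p**n with u a unit of Z_(p).
    Returns the pair (u, n)."""
    if q == 0 or q.denominator % p == 0:
        raise ValueError("q must be a non-zero element of Z_(p)")
    n, num = 0, q.numerator
    while num % p == 0:   # strip every factor p from the numerator
        num //= p
        n += 1
    return Fraction(num, q.denominator), n

def generator_exponent(generators, p):
    """Exponent m such that the ideal generated by the given non-zero
    elements of Z_(p) equals the principal ideal generated by p**m."""
    return min(decompose(q, p)[1] for q in generators)

u, n = decompose(Fraction(75, 4), 5)
print(u, n)  # 3/4 2, since 75/4 = (3/4) * 5^2
print(generator_exponent([Fraction(75, 4), Fraction(10, 3)], 5))  # 1
```

The second call mirrors the choice of $m$ in the proof: the ideal generated by $75/4$ and $10/3$ in $\mb{Z}_{(5)}$ is generated by $5^1/1$.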

Let $A$ be an abelian group. Let $(e_i)_{i \in I}$ be a family of elements of $A$. We say that this family is a basis for $A$ if the family is not empty, and if every element $x$ of $A$ has a unique expression as a linear combination

$$
x=\sum_{i \in I}x_ie_i
$$

where $x_i \in \mathbb{Z}$ and almost all $x_i$ are equal to $0$. This means that the sum is actually finite. An abelian group is said to be free if it has a basis. Alternatively, we may write $A$ as a direct sum by

$$
A \cong \bigoplus_{i \in I}\mathbb{Z}e_i.
$$

Let $S$ be a set. Say we want to get a group out of this for some reason, so how? It is not a good idea to endow $S$ with a binary operation beforehand since overall $S$ is merely a set. We shall generate a group out of $S$ in the freest possible way.

Let $\mathbb{Z}\langle S \rangle$ be the set of all maps $\varphi:S \to \mathbb{Z}$ such that, for only a finite number of $x \in S$, we have $\varphi(x) \neq 0$. For simplicity, we denote by $k \cdot x$ the map $\varphi_0 \in \mathbb{Z}\langle S \rangle$ such that $\varphi_0(x)=k$ but $\varphi_0(y) = 0$ if $y \neq x$. For any $\varphi$, we claim that $\varphi$ has a unique expression

$$
\varphi=k_1 \cdot x_1+k_2 \cdot x_2+\cdots+k_n \cdot x_n.
$$

One can consider these integers $k_i$ as the order of $x_i$, or simply the number of times that $x_i$ appears (may be negative). For $\varphi\in\mathbb{Z}\langle S \rangle$, let $I=\{x_1,x_2,\cdots,x_n\}$ be the set of elements of $S$ such that $\varphi(x_i) \neq 0$. If we denote $k_i=\varphi(x_i)$, we can show that $\psi=k_1 \cdot x_1 + k_2 \cdot x_2 + \cdots + k_n \cdot x_n$ is equal to $\varphi$. For $x \in I$, we have $\psi(x)=k$ for some $k=k_i\neq 0$ by definition of the '$\cdot$'; if $y \notin I$ however, we then have $\psi(y)=0$. This coincides with $\varphi$. $\blacksquare$

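By definition the zero map $\mathcal{O}=0 \cdot x \in \mathbb{Z}\langle S \rangle$ and therefore we may write any $\varphi$ by

$$
\varphi=\sum_{x \in S}k_x \cdot x
$$

where $k_x \in \mathbb{Z}$ and can be zero. Suppose now we have two expressions, for example

$$
\varphi=\sum_{x \in S}k_x \cdot x=\sum_{x \in S}k_x' \cdot x.
$$

Then

$$
\mathcal{O}=\varphi-\varphi=\sum_{x \in S}(k_x-k_x') \cdot x.
$$

Suppose $k_y - k_y' \neq 0$ for some $y \in S$, then

$$
\mathcal{O}(y)=k_y-k_y' \neq 0,
$$

which is a contradiction. Therefore the expression is unique. $\blacksquare$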

This $\mathbb{Z}\langle S \rangle$ is what we are looking for. It is an additive group (which can be proved immediately) and, what is more important, every element can be expressed as a ‘sum’ associated with finite number of elements of $S$. We shall write $F_{ab}(S)=\mathbb{Z}\langle S \rangle$, and call it the free abelian group generated by $S$. For elements in $S$, we say they are free generators of $F_{ab}(S)$. If $S$ is a finite set, we say $F_{ab}(S)$ is finitely generated.
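One possible way to play with $F_{ab}(S)$ on a computer is to store a finitely supported map $\varphi:S \to \mathbb{Z}$ as a dictionary holding only its non-zero values. The sketch below is an illustration under that assumption; `term`, `add` and `neg` are hypothetical helper names.

```python
def term(k, x):
    """The element k·x: the map sending x to k and everything else to 0."""
    return {x: k} if k != 0 else {}

def add(phi, psi):
    """Pointwise sum of two finitely supported maps S -> Z.
    Zero values are dropped so that every element has a unique representation."""
    out = dict(phi)
    for x, k in psi.items():
        out[x] = out.get(x, 0) + k
        if out[x] == 0:
            del out[x]
    return out

def neg(phi):
    """Additive inverse: negate every coefficient."""
    return {x: -k for x, k in phi.items()}

phi = add(term(2, "a"), term(-3, "b"))   # 2·a - 3·b
print(phi)                    # {'a': 2, 'b': -3}
print(add(phi, neg(phi)))     # {} is the zero map
```

Dropping zero coefficients is what makes two dictionaries equal exactly when the corresponding maps are equal, which is the uniqueness of the expression proved above.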

An abelian group is free if and only if it is isomorphic to a free abelian group $F_{ab}(S)$ for some set $S$.

Proof. First we shall show that $F_{ab}(S)$ is free. For $x \in S$, we denote $\varphi = 1 \cdot x$ by $[x]$. Then for any $k \in \mathbb{Z}$, we have $k[x]=k \cdot x$ and $k[x]+k'[y] = k\cdot x + k' \cdot y$. By definition of $F_{ab}(S)$, any element $\varphi \in F_{ab}(S)$ has a unique expression

$$
\varphi=k_1[x_1]+k_2[x_2]+\cdots+k_n[x_n].
$$

Therefore $F_{ab}(S)$ is free since we have found the basis $([x])_{x \in S}$.

Conversely, if $A$ is free, then it is immediate that its basis $(e_i)_{i \in I}$ generates $A$. Our statement is therefore proved. $\blacksquare$

(Proposition 1) If $A$ is an abelian group, then there is a free abelian group $F$ which has a subgroup $H$ such that $A \cong F/H$.

Proof. Let $S$ be any set containing $A$. Then we get a surjective map $\gamma: S \to A$ and a free abelian group $F_{ab}(S)$. We also get a unique homomorphism $\gamma_\ast:F_{ab}(S) \to A$ by

$$
\gamma_\ast\left(\sum_{x \in S}k_x \cdot x\right)=\sum_{x \in S}k_x\gamma(x),
$$

which is also surjective. By the first isomorphism theorem, if we set $H=\ker(\gamma_\ast)$ and $F_{ab}(S)=F$, then

$$
F/H \cong A.
$$

$\blacksquare$

(Proposition 2) If $A$ is finitely generated, then $F$ can also be chosen to be finitely generated.

Proof. Let $S$ be a generating set of $A$, and let $S'$ be a set containing $S$. Note if $S$ is finite, which means $A$ is finitely generated, then $S'$ can also be finite by inserting one or any finite number more of elements. We have a map from $S$ and $S'$ into $F_{ab}(S)$ and $F_{ab}(S')$ respectively by $f_S(x)=1 \cdot x$ and $f_{S'}(x')=1 \cdot x'$. Defining $g=f_{S'} \circ \lambda:S' \to F_{ab}(S)$, we get another homomorphism by

This defines a unique homomorphism such that $g_\ast \circ f_{S’} = g$. As one can also verify, this map is also surjective. Therefore by the first isomorphism theorem we have

$\blacksquare$

It’s worth mentioning separately that we have implicitly proved two statements with commutative diagrams:

(Proposition 3 | Universal property) If $g:S \to B$ is a mapping of $S$ into some abelian group $B$, then we can define a unique group-homomorphism making the following diagram commutative:

(Proposition 4) If $\lambda:S \to S'$ is a mapping of sets, there is a unique homomorphism $\overline{\lambda}$ making the following diagram commutative:

(In the proof of Proposition 2 we exchanged $S$ and $S'$.)

(The Grothendieck group) Let $M$ be a commutative monoid written additively. We shall prove that there exists a commutative group $K(M)$ with a monoid homomorphism

$$
\gamma:M \to K(M)
$$

satisfying the following universal property: If $f:M \to A$ is a homomorphism from $M$ into an abelian group $A$, then there exists a unique homomorphism $f_\gamma:K(M) \to A$ such that $f=f_\gamma\circ\gamma$. This can be represented by a commutative diagram:

Proof. There is a commutative diagram that describes what we are doing.

Let $F_{ab}(M)$ be the free abelian group generated by $M$. For $x \in M$, we denote $1 \cdot x \in F_{ab}(M)$ by $[x]$. Let $B$ be the group generated by all elements of the type

$$
[x+y]-[x]-[y]
$$

where $x,y \in M$. This can be considered as a subgroup of $F_{ab}(M)$. We let $K(M)=F_{ab}(M)/B$. Let $i:x \mapsto [x]$ and $\pi$ be the canonical map

$$
\pi:F_{ab}(M) \to F_{ab}(M)/B.
$$

We are done by defining $\gamma= \pi \circ i$. Then we shall verify that $\gamma$ is our desired homomorphism satisfying the universal property. For $x,y \in M$, we have $\gamma(x+y)=\pi([x+y])$ and $\gamma(x)+\gamma(y) = \pi([x])+\pi([y])=\pi([x]+[y])$. However we have

$$
[x+y]-[x]-[y] \in B,
$$

which implies that

$$
\gamma(x+y)=\pi([x+y])=\pi([x]+[y])=\gamma(x)+\gamma(y).
$$

Hence $\gamma$ is a monoid-homomorphism. Finally the universal property. By proposition 3, we have a unique homomorphism $f_\ast$ such that $f_\ast \circ i = f$. Note if $y \in B$, then $f_\ast(y) =0$. Therefore $B \subset \ker{f_\ast}$. Therefore we are done if we define $f_\gamma(x+B)=f_\ast (x)$. $\blacksquare$

Why such a $B$? Note in general $[x+y]$ is not necessarily equal to $[x]+[y]$ in $F_{ab}(M)$, but we want them to be equal. So instead we create a new equivalence relation, by factoring out the subgroup generated by the elements $[x+y]-[x]-[y]$. Therefore in $K(M)$ we see $[x+y]+B = [x]+[y]+B$, which finally makes $\gamma$ a homomorphism. We use the same strategy to generate the tensor product of two modules later. But at that time we have more than one relation to take care of.

If for all $x,y,z \in M$, $x+y=x+z$ implies $y=z$, then we say $M$ is a cancellative monoid, or the cancellation law holds in $M$. Note for the proof above we didn’t use any property of cancellation. However we still have an interesting property for cancellation law.

(Theorem) The cancellation law holds in $M$ if and only if $\gamma$ is injective.

Proof. This proof involves another approach to the Grothendieck group. We consider pairs $(x,y) \in M \times M$ with $x,y \in M$. Define

$$
(x,y) \sim (x',y') \iff x+y'+\ell=x'+y+\ell \text{ for some } \ell \in M.
$$

Then we get an equivalence relation (try to prove it yourself!). We define the addition component-wise, that is, $(x,y)+(x',y')=(x+x',y+y')$, then the equivalence classes of pairs form a group $A$, where the zero element is $[(0,0)]$. We have a monoid-homomorphism

$$
f:M \to A,\quad x \mapsto [(x,0)].
$$

If cancellation law holds in $M$, then

$$
f(x)=f(y) \iff x+\ell=y+\ell \text{ for some } \ell \in M \iff x=y.
$$

Hence $f$ is injective. By the universal property of the Grothendieck group, we get a unique homomorphism $f_\gamma$ such that $f_\gamma \circ \gamma = f$. If $x \neq y$ in $M$, then $f_\gamma (\gamma(x)) \neq f_\gamma(\gamma(y))$ since $f$ is injective. This implies $\gamma(x) \neq \gamma(y)$. Hence $\gamma$ is injective.

Conversely, if $\gamma$ is injective, then $i$ is injective (this can be verified by contradiction). Then we see $f=f_\ast \circ i$ is injective. But $f(x)=f(y)$ if and only if $x+\ell = y+\ell$, hence $x+ \ell = y+ \ell$ implies $x=y$, the cancellation law holds on $M$.
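The pair construction used in this proof can be tested on small monoids. The Python sketch below is only an illustration: `equivalent` is a made-up helper, and the existential quantifier over $\ell$ is searched over a finite list of candidates supplied by the caller, which is an assumption needed to make the search terminate. The two test monoids are $(\mathbb{N},+)$, which is cancellative, and $(\{0,1\},\max)$, which is not.

```python
def equivalent(pair1, pair2, op, candidates):
    """(x, y) ~ (x', y')  iff  x + y' + l == x' + y + l for some l.
    `op` is the monoid operation; `candidates` is a finite list of l to try."""
    (x, y), (x2, y2) = pair1, pair2
    return any(op(op(x, y2), l) == op(op(x2, y), l) for l in candidates)

def plus(a, b):
    return a + b

# (N, +): the class of (5, 3) equals the class of (2, 0), i.e. "5 - 3 = 2".
print(equivalent((5, 3), (2, 0), plus, [0]))
# f(1) = [(1, 0)] differs from f(0) = [(0, 0)]: no l in the range tried works.
print(equivalent((1, 0), (0, 0), plus, range(50)))
# ({0, 1}, max): 1 and 0 are distinct, but l = 1 identifies f(1) and f(0).
print(equivalent((1, 0), (0, 0), max, [0, 1]))
```

In the second monoid $\max(1,1)=\max(0,1)$ although $1 \neq 0$, so cancellation fails, and accordingly $f$ (hence $\gamma$) is not injective there.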

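Our first example is $\mathbb{N}$. Elements of $F_{ab}(\mathbb{N})$ are of the form

$$
\sum_{j=1}^{m}k_j \cdot n_j,\quad k_j \in \mathbb{Z},\ n_j \in \mathbb{N}.
$$

For elements in $B$ they are generated by

$$
1 \cdot (a+b)-1 \cdot a-1 \cdot b,\quad a,b \in \mathbb{N},
$$

which we wish to represent $0$. Indeed, $K(\mathbb{N}) \simeq \mathbb{Z}$, as we see from the homomorphism

$$
f:K(\mathbb{N}) \to \mathbb{Z},\quad \sum_{j=1}^{m}k_j \cdot n_j+B \mapsto \sum_{j=1}^{m}k_jn_j.
$$

For $r \in \mathbb{Z}$ with $r \geq 0$, we see $f(1 \cdot r+B)=r$, and for $r<0$ we see $f((-1) \cdot (-r)+B)=r$. On the other hand, if $\sum_{j=1}^{m}k_j \cdot n_j \not\in B$, then its image under $f$ is not $0$.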

In the first example we 'granted' the natural numbers 'subtraction'. Next we grant 'division' to a multiplicative monoid.

Consider $M=\mathbb{Z} \setminus \{0\}$ under multiplication. Now for $F_{ab}(M)$ we write elements multiplicatively, in the form

$$
\prod_{j=1}^{m}[n_j]^{k_j},
$$

which denotes that $\varphi(n_j)=k_j$; only the notation differs from the additive case. Then for elements in $B$ they are generated by

$$
[ab][a]^{-1}[b]^{-1},\quad a,b \in M,
$$

which we wish to represent $1$. Then we see $K(M) \simeq \mathbb{Q} \setminus \{0\}$ if we take the isomorphism

$$
\prod_{j=1}^{m}[n_j]^{k_j}B \mapsto \prod_{j=1}^{m}n_j^{k_j}.
$$

Of course this is not the end of the Grothendieck group. But for further examples we may need a lot of topology background. For example, we have the topological $K$-theory group of a topological space to be the Grothendieck group of isomorphism classes of topological vector bundles. But I think it is not a good idea to post these examples at this point.

A long exact sequence of cohomology groups (zig-zag and diagram-chasing)

(This section is intended to introduce the background. Feel free to skip if you already know exterior differentiation.)

There are several useful tools for vector calculus on $\mathbb{R}^3,$ namely gradient, curl, and divergence. It is possible to treat the gradient of a differentiable function $f$ on $\mathbb{R}^3$ at a point $x_0$ as the Fréchet derivative at $x_0$. But it does not work for curl and divergence at all. Fortunately there is another abstraction that works for all of them. It comes from differential forms.

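Let $x_1,\cdots,x_n$ be the linear coordinates on $\mathbb{R}^n$ as usual. We define an algebra $\Omega^{\ast}$ over $\mathbb{R}$ generated by $dx_1,\cdots,dx_n$ with the following relations:

$$
\begin{cases}
(dx_i)^2=0, \\
dx_idx_j=-dx_jdx_i, \quad i \neq j.
\end{cases}
$$

This is a vector space as well, and it's easy to derive that it has a basis by

$$
1,\ dx_i,\ dx_idx_j,\ dx_idx_jdx_k,\ \cdots,\ dx_1\cdots dx_n
$$

where $i<j<k$. The $C^{\infty}$ differential forms on $\mathbb{R}^n$ are defined to be the tensor product

$$
\Omega^{\ast}(\mathbb{R}^n)=\{C^{\infty}\text{ functions on }\mathbb{R}^n\} \otimes_{\mathbb{R}} \Omega^{\ast}.
$$

As can be shown, for $\omega \in \Omega^{\ast}(\mathbb{R}^n)$ built from products of exactly $k$ of the $dx_i$, we have a unique representation by

$$
\omega=\sum f_{i_1\cdots i_k}dx_{i_1}\cdots dx_{i_k},\quad i_1<\cdots<i_k,
$$

and in this case we also say $\omega$ is a $C^{\infty}$ $k$-form on $\mathbb{R}^n$ (for simplicity we also write $\omega=\sum f_Idx_I$). The vector space of all $k$-forms will be denoted by $\Omega^k(\mathbb{R}^n)$. And naturally we have $\Omega^{\ast}(\mathbb{R}^n)$ to be graded since

$$
\Omega^{\ast}(\mathbb{R}^n)=\bigoplus_{k=0}^{n}\Omega^k(\mathbb{R}^n).
$$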

But if we have $\omega \in \Omega^0(\mathbb{R}^n)$, we see $\omega$ is merely a $C^{\infty}$ function. As taught in multivariable calculus course, for the differential of $\omega$ we have

$$
d\omega=\sum_{i=1}^{n}\frac{\partial \omega}{\partial x_i}dx_i
$$

and it turns out that $d\omega\in\Omega^{1}(\mathbb{R}^n)$. This inspires us to obtain a generalization onto the differential operator $d$:

$$
d:\Omega^k(\mathbb{R}^n) \to \Omega^{k+1}(\mathbb{R}^n)
$$

and $d\omega$ is defined as follows. The case when $k=0$ is defined as usual (just the one above). For $k>0$ and $\omega=\sum f_I dx_I,$ $d\omega$ is defined 'inductively' by

$$
d\omega=\sum df_I\,dx_I.
$$

This $d$ is the so-called exterior differentiation, which serves as the ultimate abstract extension of gradient, curl, divergence, etc. If we restrict ourselves to $\mathbb{R}^3$, we see these vector calculus tools come up in the nature of things.

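Functions

$$
df=\frac{\partial f}{\partial x}dx+\frac{\partial f}{\partial y}dy+\frac{\partial f}{\partial z}dz.
$$

$1$-forms

$$
d(f_1dx+f_2dy+f_3dz)=\left(\frac{\partial f_3}{\partial y}-\frac{\partial f_2}{\partial z}\right)dydz-\left(\frac{\partial f_1}{\partial z}-\frac{\partial f_3}{\partial x}\right)dxdz+\left(\frac{\partial f_2}{\partial x}-\frac{\partial f_1}{\partial y}\right)dxdy.
$$

$2$-forms

$$
d(f_1dydz-f_2dxdz+f_3dxdy)=\left(\frac{\partial f_1}{\partial x}+\frac{\partial f_2}{\partial y}+\frac{\partial f_3}{\partial z}\right)dxdydz.
$$

The calculation is tedious but a nice exercise to understand the definition of $d$ and $\Omega^{\ast}$.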

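By elementary computation we are also able to show that $d^2\omega=0$ for all $\omega \in \Omega^{\ast}(\mathbb{R}^n)$ (Hint: $\frac{\partial^2 f}{\partial x_i \partial x_j}=\frac{\partial^2 f}{\partial x_j \partial x_i}$ but $dx_idx_j=-dx_jdx_i$). Now we consider a vector field $\overrightarrow{v}=(v_1,v_2)$ of dimension $2$. If $C$ is an arbitrary simple closed smooth curve in $\mathbb{R}^2$, then we expect

$$
\oint_C \overrightarrow{v} \cdot d\overrightarrow{r}=\oint_C v_1dx+v_2dy
$$

to be $0$. If this happens (note the arbitrariness of $C$), we say $\overrightarrow{v}$ is a conservative field (path independent).

So when is a field conservative? It happens when there is a function $f$ such that

$$
\overrightarrow{v}=\nabla f=\left(\frac{\partial f}{\partial x},\frac{\partial f}{\partial y}\right).
$$

This is equivalent to saying that

$$
v_1dx+v_2dy=df.
$$

If we use $C^{\ast}$ to denote the area enclosed by $C$, by Green's theorem, we have

$$
\oint_C v_1dx+v_2dy=\iint_{C^{\ast}}\left(\frac{\partial v_2}{\partial x}-\frac{\partial v_1}{\partial y}\right)dxdy=\iint_{C^{\ast}}d(v_1dx+v_2dy).
$$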

If you translate what you've learned in multivariable calculus course (path independence) into the language of differential forms, you will see that the set of all conservative fields is precisely the image of $d_0:\Omega^0(\mathbb{R}^2) \to \Omega^1(\mathbb{R}^2)$. Also, they are in the kernel of the next $d_1:\Omega^1(\mathbb{R}^2) \to \Omega^2(\mathbb{R}^2)$. These $d$'s are naturally homomorphisms, so it's natural to discuss the factor group. But before that, we need some terminology.
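A quick numerical experiment illustrates the difference between a field in the image of $d_0$ and one that is not closed. The Python sketch below approximates the line integral around the unit circle by a midpoint sum; the function `circulation` and the two sample fields are choices made for this example only.

```python
import math

def circulation(v, n=2000):
    """Approximate the line integral of v = (v1, v2) around the unit circle
    by a midpoint Riemann sum in the parameter t of (cos t, sin t)."""
    total = 0.0
    for k in range(n):
        t = 2 * math.pi * (k + 0.5) / n
        x, y = math.cos(t), math.sin(t)
        dx, dy = -math.sin(t), math.cos(t)   # derivative of (cos t, sin t)
        v1, v2 = v(x, y)
        total += (v1 * dx + v2 * dy) * (2 * math.pi / n)
    return total

def grad_f(x, y):
    """Gradient of f(x, y) = x^2 y, i.e. the 1-form df = 2xy dx + x^2 dy."""
    return 2 * x * y, x * x

def rotation(x, y):
    """v = (-y, x); here dv2/dx - dv1/dy = 2, so the form is not closed."""
    return -y, x

print(abs(circulation(grad_f)) < 1e-9)                  # exact form: integral vanishes
print(abs(circulation(rotation) - 2 * math.pi) < 1e-9)  # 2 * (area of the unit disc)
```

The second value agrees with Green's theorem: the integrand $\partial v_2/\partial x-\partial v_1/\partial y$ is the constant $2$, integrated over a disc of area $\pi$.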

The complex $\Omega^{\ast}(\mathbb{R}^n)$ together with $d$ is called the de Rham complex on $\mathbb{R}^n$. Now consider the sequence

$$
0 \to \Omega^0(\mathbb{R}^n) \xrightarrow{d_0} \Omega^1(\mathbb{R}^n) \xrightarrow{d_1} \cdots \xrightarrow{d_{n-1}} \Omega^n(\mathbb{R}^n) \to 0.
$$

We say $\omega \in \Omega^k(\mathbb{R}^n)$ is closed if $d_k\omega=0$, or equivalently, $\omega \in \ker d_k$. Dually, we say $\omega$ is exact if there exists some $\mu \in \Omega^{k-1}(\mathbb{R}^n)$ such that $d\mu=\omega$, that is, $\omega \in \operatorname{im}d_{k-1}$. Of course all $d_k$'s can be written as $d$ but the index makes it easier to understand. Instead of doing integration or differentiation, which is 'uninteresting', we are going to discuss the abstract structure of it.

The $k$-th de Rham cohomology in $\mathbb{R}^n$ is defined to be the factor space

$$
H_{DR}^k(\mathbb{R}^n)=\frac{\ker d_k}{\operatorname{im}d_{k-1}}.
$$

As an example, note that by the fundamental theorem of calculus, every $1$-form on $\mathbb{R}$ is exact, therefore $H_{DR}^1(\mathbb{R})=0$.

Since the de Rham complex is a special case of a differential complex, and other restrictions of the de Rham complex play no critical role thereafter, we are going to discuss the algebraic structure of differential complexes directly.

We are going to show that there exists a long exact sequence of cohomology groups after a short exact sequence is defined. For convenience let's recall here some basic definitions.

A sequence of vector spaces (or groups)

$$
\cdots \to G_{k-1} \xrightarrow{f_{k-1}} G_k \xrightarrow{f_k} G_{k+1} \to \cdots
$$

is said to be exact if the image of $f_{k-1}$ is the kernel of $f_k$ for all $k$. Sometimes we need to discuss an extremely short one by

$$
0 \to A \xrightarrow{f} B \xrightarrow{g} C \to 0.
$$

As one can see, $f$ is injective and $g$ is surjective.

A direct sum of vector spaces $C=\oplus_{k \in \mathbb{Z}}C^k$ is called a differential complex if there are homomorphisms by

$$
\cdots \to C^{k-1} \xrightarrow{d_{k-1}} C^k \xrightarrow{d_k} C^{k+1} \to \cdots
$$

such that $d_kd_{k-1}=0$. Sometimes we write $d$ instead of $d_{k}$ since this differential operator of $C$ is universal. Therefore we may also say that $d^2=0$. The cohomology of $C$ is the direct sum of vector spaces $H(C)=\oplus_{k \in \mathbb{Z}}H^k(C) $ where

$$
H^k(C)=\frac{\ker d_k}{\operatorname{im}d_{k-1}}.
$$

A map $f: A \to B$ where $A$ and $B$ are differential complexes, is called a chain map if we have $fd_A=d_Bf$.
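For a finite-dimensional differential complex, the dimension of $H^k(C)$ is $\dim\ker d_k-\operatorname{rank}d_{k-1}$, which can be computed by Gaussian elimination. The Python sketch below does this over $\mathbb{Q}$ with exact fractions. The example complex is an assumption made for illustration (it is not taken from the text): $C^0$ is the space of functions on the three vertices of a triangle, $C^1$ the space of functions on its three edges, and $d_0$ takes differences along edges.

```python
from fractions import Fraction

def rank(matrix):
    """Rank of a matrix over Q by Gaussian elimination with exact fractions."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    ncols = len(rows[0]) if rows else 0
    r = 0
    for c in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def cohomology_dims(dims, differentials):
    """dims[k] = dim C^k; differentials[k] = matrix of d_k : C^k -> C^{k+1}.
    Returns [dim H^0, dim H^1, ...] using dim ker d_k - rank d_{k-1}."""
    ranks = [rank(d) for d in differentials]
    result = []
    for k, n in enumerate(dims):
        rank_out = ranks[k] if k < len(ranks) else 0   # rank of d_k
        rank_in = ranks[k - 1] if k >= 1 else 0        # rank of d_{k-1}
        result.append(n - rank_out - rank_in)
    return result

# Rows are the edges (01, 12, 20), columns the vertices (0, 1, 2).
d0 = [[-1, 1, 0],
      [0, -1, 1],
      [1, 0, -1]]
print(cohomology_dims([3, 3], [d0]))  # [1, 1]
```

Both cohomology spaces are one-dimensional here, which is the combinatorial shadow of a circle.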

Now consider a short exact sequence of differential complexes

$$
0 \to A \xrightarrow{f} B \xrightarrow{g} C \to 0
$$

where both $f$ and $g$ are chain maps (this is important). Then there exists a long exact sequence by

$$
\cdots \to H^q(A) \xrightarrow{f^{\ast}} H^q(B) \xrightarrow{g^{\ast}} H^q(C) \xrightarrow{d^{\ast}} H^{q+1}(A) \xrightarrow{f^{\ast}} H^{q+1}(B) \to \cdots
$$

Here, $f^{\ast}$ and $g^{\ast}$ are the naturally induced maps. For $c \in C^q$, $d^{\ast}[c]$ is defined to be the cohomology class $[a]$ where $a \in A^{q+1}$, and that $f(a)=db$, and that $g(b)=c$. The sequence can be described using the two-layer commutative diagram below.

The long exact sequence is actually the purple one (you see why people may call this zig-zag lemma). This sequence is 'based on' the blue diagram, which can be considered naturally as an expansion of the short exact sequence. The method that will be used in the following proof is called diagram-chasing, whose importance has already been described by Professor James Munkres: master this. We will be abusing the properties of almost every homomorphism and group appearing in this commutative diagram to trace the elements.

First, we give a precise definition of $d^{\ast}$. For a closed $c \in C^q$, by the surjectivity of $g$ (note this sequence is exact), there exists some $b \in B^q$ such that $g(b)=c$. But $g(db)=d(g(b))=dc=0$, so for $db \in B^{q+1}$ we have $db \in \ker g$. By the exactness of the sequence, we see $db \in \operatorname{im}{f}$, that is, there exists some $a \in A^{q+1}$ such that $f(a)=db$. Further, $a$ is closed since

$$
f(da)=d(f(a))=d(db)=d^2b=0
$$

and we already know that $f$ has trivial kernel (which contains $da$).

$d^{\ast}$ is therefore defined by

$$
d^{\ast}[c]=[a]
$$

where $[\cdot]$ means “the cohomology class of”.

But it is expected that $d^{\ast}$ is a well-defined homomorphism. Let $c_q$ and $c_q'$ be two closed forms in $C^q$. To show $d^{\ast}$ is well-defined, we suppose $[c_q]=[c_q']$ (i.e. they are cohomologous). Choose $b_q$ and $b_q'$ so that $g(b_q)=c_q$ and $g(b_q')=c_q'$. Accordingly, we also pick $a_{q+1}$ and $a_{q+1}'$ such that $f(a_{q+1})=db_q$ and $f(a_{q+1}')=db_q'$. By definition of $d^{\ast}$, we need to show that $[a_{q+1}]=[a_{q+1}']$.

Recall the properties of factor group. $[c_q]=[c_q']$ if and only if $c_q-c_q' \in \operatorname{im}d$. Therefore we can pick some $c_{q-1} \in C^{q-1}$ such that $c_q-c_q'=dc_{q-1}$. Again, by the surjectivity of $g$, there is some $b_{q-1}$ such that $g(b_{q-1})=c_{q-1}$.

Note that

$$
g(b_q-b_q'-db_{q-1})=c_q-c_q'-d(g(b_{q-1}))=c_q-c_q'-dc_{q-1}=0.
$$

Therefore $b_q-b_q'-db_{q-1} \in \operatorname{im} f$. We are able to pick some $a_q \in A^{q}$ such that $f(a_q)=b_q-b_q'-db_{q-1}$. But now we have

$$
f(da_q)=d(f(a_q))=d(b_q-b_q'-db_{q-1})=db_q-db_q'=f(a_{q+1})-f(a_{q+1}')=f(a_{q+1}-a_{q+1}').
$$

Since $f$ is injective, we have $da_q=a_{q+1}-a_{q+1}'$, which implies that $a_{q+1}-a_{q+1}' \in \operatorname{im}d$. Hence $[a_{q+1}]=[a_{q+1}']$.

To show that $d^{\ast}$ is a homomorphism, note that $g(b_q+b_q')=c_q+c_q'$ and $f(a_{q+1}+a_{q+1}')=d(b_q+b_q')$. Thus we have

$$
d^{\ast}[c_q+c_q']=[a_{q+1}+a_{q+1}'].
$$

The latter equals $[a_{q+1}]+[a_{q+1}']$ since the canonical map is a homomorphism. Therefore we have

$$
d^{\ast}[c_q+c_q']=[a_{q+1}]+[a_{q+1}']=d^{\ast}[c_q]+d^{\ast}[c_q'].
$$

Therefore the long sequence exists. It remains to prove exactness. Firstly we need to prove exactness at $H^q(B)$. Pick $[b] \in H^q(B)$. If there is some closed $a \in A^q$ such that $f(a)=b$, then $g(f(a))=0$. Therefore $g^{\ast}[b]=g^{\ast}[f(a)]=[g(f(a))]=[0]$; hence $\operatorname{im}f^{\ast} \subset \ker g^{\ast}$.

Conversely, suppose now $g^{\ast}[b]=[0]$, we shall show that there exists some $[a] \in H^q(A)$ such that $f^{\ast}[a]=[b]$. Note $g(b) \in \operatorname{im}d$ where $d$ is the differential operator of $C$ (why?). Therefore there exists some $c_{q-1} \in C^{q-1}$ such that $g(b)=dc_{q-1}$. Pick some $b_{q-1}$ such that $g(b_{q-1})=c_{q-1}$. Then we have

$$
g(b-db_{q-1})=g(b)-d(g(b_{q-1}))=g(b)-dc_{q-1}=0.
$$

Therefore $f(a)=b-db_{q-1}$ for some $a \in A^q$. Note $a$ is closed since

$$
f(da)=d(f(a))=d(b-db_{q-1})=db-d^2b_{q-1}=db=0
$$

and $f$ is injective. $db=0$ since we have

$$
[b] \in H^q(B)=\frac{\ker d_q}{\operatorname{im}d_{q-1}}.
$$

Furthermore,

$$
f^{\ast}[a]=[f(a)]=[b-db_{q-1}]=[b].
$$

Therefore $\ker g^{\ast} \subset \operatorname{im} f^{\ast}$ as desired.

Now we prove exactness at $H^q(C)$. (Notation:) pick $[c_q] \in H^q(C)$, there exists some $b_q$ such that $g(b_q)=c_q$; choose $a_{q+1}$ such that $f(a_{q+1})=db_q$. Then $d^{\ast}[c_q]=[a_{q+1}]$ by definition.

If $[c_q] \in \operatorname{im}g^{\ast}$, we see $[c_q]=[g(b_q)]=g^{\ast}[b_q]$. But $b_q$ is closed since $[b_q] \in H^q(B)$, we see $f(a_{q+1})=db_q=0$, therefore $d^{\ast}[c_q]=[a_{q+1}]=[0]$ since $f$ is injective. Therefore $\operatorname{im}g^{\ast} \subset \ker d^{\ast}$.

Conversely, suppose $d^{\ast}[c_q]=[0]$. By definition of $H^{q+1}(A)$, there is some $a_q \in A^q$ such that $da_q = a_{q+1}$ (can you see why?). We claim that $b_q-f(a_q)$ is closed and we have $[c_q]=g^{\ast}[b_q-f(a_q)]$.

By direct computation,

$$
d(b_q-f(a_q))=db_q-f(da_q)=db_q-f(a_{q+1})=0.
$$

Meanwhile

$$
g^{\ast}[b_q-f(a_q)]=[g(b_q)-g(f(a_q))]=[g(b_q)]=[c_q].
$$

Therefore $\ker d^{\ast} \subset \operatorname{im}g^{\ast}$. Note that $g(f(a_q))=0$ by exactness.

Finally, we prove exactness at $H^{q+1}(A)$. Pick $\alpha \in H^{q+1}(A)$. If $\alpha \in \operatorname{im}d^{\ast}$, then $\alpha=[a_{q+1}]$ where $f(a_{q+1})=db_q$ by definition. Then

$$
f^{\ast}(\alpha)=[f(a_{q+1})]=[db_q]=[0].
$$

Therefore $\alpha \in \ker f^{\ast}$. Conversely, if we have $f^{\ast}(\alpha)=[0]$, pick a representative element of $\alpha$, namely we write $\alpha=[a]$; then $[f(a)]=[0]$. But this implies that $f(a) \in \operatorname{im}d$ where $d$ denotes the differential operator of $B$. Writing $b_{q+1}=f(a) \in B^{q+1}$, there exists some $b_q \in B^q$ such that $db_{q}=b_{q+1}$. Suppose now $c_q=g(b_q)$. $c_q$ is closed since $dc_q=g(db_q)=g(b_{q+1})=g(f(a))=0$. By definition, $\alpha=d^{\ast}[c_q]$. Therefore $\ker f^{\ast} \subset \operatorname{im}d^{\ast}$.

As you may see, almost every property of the diagram has been used. The exactness at $B^q$ ensures that $g(f(a))=0$. The definition of $H^q(A)$ ensures that we can simplify the meaning of $[0]$. We even use the injectivity of $f$ and the surjectivity of $g$.

This proof is also a demonstration of diagram-chasing technique. As you have seen, we keep running through the diagram to ensure that there is “someone waiting” at the destination.

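This long exact sequence is useful. Here is an example.

By differential forms on an open set $U \subset \mathbb{R}^n$, we mean

$$
\Omega^{\ast}(U)=\{C^{\infty}\text{ functions on }U\} \otimes_{\mathbb{R}} \Omega^{\ast}.
$$

And the de Rham cohomology of $U$ comes up in the nature of things.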

We are able to compute the cohomology of the union of two open sets. Suppose $M=U \cup V$ is a manifold with $U$ and $V$ open, and $U \amalg V$ is the disjoint union of $U$ and $V$ (the coproduct in the category of sets). $\partial_0$ and $\partial_1$ are inclusions of $U \cap V$ in $U$ and $V$ respectively. We have a natural sequence of inclusions

Since $\Omega^{*}$ can also be treated as a contravariant functor from the category of Euclidean spaces with smooth maps to the category of commutative differential graded algebras and their homomorphisms, we have

By taking the difference of the last two maps, we have

The sequence above is a short exact sequence. Therefore we may use the zig-zag lemma to find a long exact sequence (which is also called the Mayer-Vietoris sequence) by

This sequence allows one to compute the cohomology of the union of two open sets. For example, for $H^{*}_{DR}(\mathbb{R}^2-P-Q)$, where $P(x_p,y_p)$ and $Q(x_q,y_q)$ are two distinct points in $\mathbb{R}^2$, we may write

and

Therefore we may write $M=\mathbb{R}^2$, $U=\mathbb{R}^2-P$ and $V=\mathbb{R}^2-Q$. For $U$ and $V$, we have another decomposition by

where

But

is a four-time (homeomorphic) copy of $\mathbb{R}^2$. So things become clear after we compute $H^{\ast}_{DR}(\mathbb{R}^2)$.

Cauchy sequence in group theory

Before we go into group theory, let’s recall how a Cauchy sequence is defined in analysis.

A sequence $(x_n)_{n=1}^{\infty}$ of real/complex numbers is called a Cauchy sequence if, for every $\varepsilon>0$, there is a positive integer $N$ such that for all $m,n>N$, we have $|x_m-x_n|<\varepsilon$.

That is, the terms of the sequence eventually become arbitrarily close to one another. Since only distance is involved, the definition of a Cauchy sequence in a metric space arises naturally.

Given a metric space $(X,d)$, a sequence $(x_n)_{n=1}^{\infty}$ is Cauchy if for every real number $\varepsilon>0$, there is a positive integer $N$ such that, for all $m,n>N$, the distance satisfies $d(x_m,x_n)<\varepsilon$.

By considering the topology induced by the metric, we see that $x_n$ lies in a neighborhood of $x_m$ with radius $\varepsilon$. But a topology can be described by neighborhoods, hence the definition of a Cauchy sequence in a topological vector space follows.

For a topological vector space $X$, pick a local base $\mathcal{B}$; then $(x_n)_{n=1}^{\infty}$ is a Cauchy sequence if for each member $U \in \mathcal{B}$, there exists some number $N$ such that for $m,n>N$, we have $x_n-x_m \in U$.

But in a general topological space, this does not work. Consider the two topological spaces

with usual topology. We have $X \simeq Y$ since we have the map by

as a homeomorphism. For the Cauchy sequence $(\frac{1}{n+1})_{n=1}^{\infty}$, we see that $(h(\frac{1}{n+1}))_{n=1}^{\infty}=(n+1)_{n=1}^{\infty}$, which is not Cauchy. This counterexample shows that being a Cauchy sequence is not preserved by homeomorphisms.
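The counterexample can be checked numerically. The sketch below assumes the homeomorphism is $h(x)=1/x$ (which is consistent with $h(\frac{1}{n+1})=n+1$, but the formula itself is an assumption here) and only inspects a finite truncation of the sequences; the helper name `tail_spread` is made up for illustration.

```python
from fractions import Fraction

def h(x):
    # Assumed homeomorphism h(x) = 1/x; an illustration, not taken from the text.
    return 1 / x

# x_n = 1/(n+1) for n = 1, ..., 2000 and its image under h.
xs = [Fraction(1, n + 1) for n in range(1, 2001)]
ys = [h(x) for x in xs]

def tail_spread(seq, start):
    """Largest distance between two sampled terms with list index >= start."""
    tail = seq[start:]
    return max(tail) - min(tail)

# The tails of (x_n) shrink, while consecutive terms of (h(x_n)) stay 1 apart.
for start in (10, 100, 1000):
    print(start, float(tail_spread(xs, start)))
print(all(b - a == 1 for a, b in zip(ys, ys[1:])))
```

Since consecutive terms of the image sequence always differ by exactly $1$, no $N$ can work for $\varepsilon=\frac{1}{2}$.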

Similarly, one can define a Cauchy sequence in a topological group (by replacing subtraction with multiplication by the inverse).

A sequence $(x_n)_{n=1}^{\infty}$ in a topological group $G$ is a Cauchy sequence if for every open neighborhood $U$ of the identity of $G$, there exists some number $N$ such that whenever $m,n>N$, we have $x_nx_m^{-1} \in U$.

A metric space $(X,d)$ where every Cauchy sequence converges is complete.

Spaces like $\mathbb{R}$ and $\mathbb{C}$ are complete with the Euclidean metric. But consider the sequence in $\mathbb{Q}$ given by

we have $a_n\in\mathbb{Q}$ for all $n$ but the sequence does not converge in $\mathbb{Q}$. Indeed in $\mathbb{R}$ we can naturally write $a_n \to e$ but $e \notin \mathbb{Q}$ as we all know.
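To see such a sequence concretely, here is a minimal sketch using exact rational arithmetic. It assumes the partial sums $a_n=\sum_{k=0}^{n}\frac{1}{k!}$ as the sequence; this is one possible choice for illustration, and any rational sequence converging to $e$ would serve equally well.

```python
from fractions import Fraction
from math import e, factorial

def a(n):
    # Assumed example: a_n = sum_{k=0}^{n} 1/k!, an exact rational for every n.
    return sum(Fraction(1, factorial(k)) for k in range(n + 1))

terms = [a(n) for n in range(1, 16)]

# Every term is a rational number, yet the floats approach e = 2.71828...
for n in (5, 10, 15):
    print(n, terms[n - 1], float(terms[n - 1]))

# Consecutive terms get arbitrarily close: a_15 - a_14 = 1/15!.
print(terms[-1] - terms[-2])
```

Every term stays in $\mathbb{Q}$ and the gaps between terms shrink rapidly, but the only candidate for a limit lies outside $\mathbb{Q}$.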

There are several ways to construct $\mathbb{R}$ from $\mathbb{Q}$. One of the most famous methods is Dedekind cuts. However, that construction makes no explicit use of Cauchy sequences. Another method uses Cauchy sequences explicitly, and we follow that route algebraically.

Suppose we are given a group $G$ with a sequence of normal subgroups $(H_n)_{n=1}^{\infty}$ with $H_n \supset H_{n+1}$ for all $n$, all of which have finite index. We are going to complete this group.

A sequence $(x_n)_{n=1}^{\infty}$ in $G$ will be called a Cauchy sequence if given $H_k$, there exists some $N>0$ such that for $m,n>N$, we have $x_nx_m^{-1} \in H_k$.

Indeed, this looks very similar to what we see in a topological group, but we don’t want to grant a topology to the group anyway. This definition does not go too far from the original definition of a Cauchy sequence in $\mathbb{R}$ either. If you treat $H_k$ as some ‘small’ thing, it shows that $x_m$ and $x_n$ are close enough (by considering $x_nx_m^{-1}$ as their difference).

A sequence $(x_n)_{n=1}^{\infty}$ in $G$ will be called a null sequence if given $k$, there exists some $N>0$ such that for all $n>N$, we have $x_n \in H_k$,

or you may write $x_ne^{-1} \in H_k$. It can be considered as being arbitrarily close to the identity $e$.
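Both definitions can be tried out on a small example. The sketch below assumes the additive group $G=\mathbb{Z}$ with $H_k=3^k\mathbb{Z}$, which is a hypothetical choice for illustration (each $H_k$ is normal of finite index and $H_k \supset H_{k+1}$); in additive notation $x_nx_m^{-1}$ becomes $x_n-x_m$. The function names are made up, and the checks only cover a finite sample of each sequence.

```python
P = 3  # hypothetical choice: H_k = P**k * Z inside the additive group G = Z

def in_H(x, k):
    """Membership test for the subgroup H_k = P**k * Z."""
    return x % P**k == 0

def is_cauchy_upto(seq, k, N):
    """Check x_n - x_m in H_k for all sampled m, n > N (seq[i] stores x_{i+1})."""
    tail = seq[N:]
    return all(in_H(x - y, k) for x in tail for y in tail)

def is_null_upto(seq, k, N):
    """Check x_n in H_k for all sampled n > N."""
    return all(in_H(x, k) for x in seq[N:])

# x_n = 1 + P + ... + P**(n-1): Cauchy, but not null since x_n = 1 mod P.
xs = [sum(P**i for i in range(n)) for n in range(1, 30)]
# z_n = P**n: a null sequence.
zs = [P**n for n in range(1, 30)]

print(all(is_cauchy_upto(xs, k, k) for k in range(1, 10)))
print(is_null_upto(xs, 1, 20))
print(all(is_null_upto(zs, k, k) for k in range(1, 10)))
```

Here $N=k$ already works for each $H_k$, because $x_n-x_m$ is divisible by $3^{\min(m,n)}$.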

The Cauchy sequences (of $G$) form a group under termwise product

Proof. Let $C$ be the set of Cauchy sequences; we shall show that $C$ forms a group. For $(x_1,x_2,\cdots),(y_1,y_2,\cdots)\in C$, the product is defined by $(x_1,x_2,\cdots)(y_1,y_2,\cdots)=(x_1y_1,x_2y_2,\cdots)$.

The associativity follows naturally from the associativity of $G$. To show that $(x_1y_1,x_2y_2,\cdots)$ is still a Cauchy sequence, notice that given $k$, for big enough $m$ and $n$ we have $x_nx_m^{-1} \in H_k$ and $y_ny_m^{-1} \in H_k$.

But $(x_ny_n)(x_my_m)^{-1}=x_ny_ny_m^{-1}x_m^{-1}$. To show that this is an element of $H_k$, notice that $x_ny_ny_m^{-1}x_m^{-1}=(x_ny_ny_m^{-1}x_n^{-1})(x_nx_m^{-1})$.

Since $y_ny_m^{-1}\in H_k$ and $H_k$ is normal, we have $x_ny_ny_m^{-1}x_n^{-1} \in H_k$. Since $x_nx_m^{-1} \in H_k$, $(x_ny_n)(x_my_m)^{-1}$ can be viewed as a product of two elements of $H_k$, and is therefore an element of $H_k$.
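The decomposition $(x_ny_n)(x_my_m)^{-1}=(x_ny_ny_m^{-1}x_n^{-1})(x_nx_m^{-1})$ matters only when $G$ is non-abelian, so it is worth a sanity check in a small non-abelian group. The sketch below is a brute-force check under an assumed setting: $G=S_3$ with the normal subgroup $H=A_3$, chosen purely for illustration.

```python
from itertools import permutations, product

def mul(p, q):
    """Composition of permutations stored as tuples: (p*q)(i) = p[q[i]]."""
    return tuple(p[i] for i in q)

def inv(p):
    """Inverse permutation."""
    out = [0] * len(p)
    for i, pi in enumerate(p):
        out[pi] = i
    return tuple(out)

def is_even(p):
    """Parity via the number of inversions."""
    n = len(p)
    return sum(p[i] > p[j] for i in range(n) for j in range(i + 1, n)) % 2 == 0

S3 = list(permutations(range(3)))
H = {p for p in S3 if is_even(p)}  # A_3, a normal subgroup of S_3

identity_holds = True
closure_holds = True
for xn, xm, yn, ym in product(S3, repeat=4):
    lhs = mul(mul(xn, yn), inv(mul(xm, ym)))
    conj = mul(mul(mul(xn, yn), inv(ym)), inv(xn))  # x_n y_n y_m^{-1} x_n^{-1}
    rhs = mul(conj, mul(xn, inv(xm)))
    identity_holds &= lhs == rhs
    # If both 'differences' lie in H, so does the 'difference' of the products.
    if mul(xn, inv(xm)) in H and mul(yn, inv(ym)) in H:
        closure_holds &= lhs in H

print(identity_holds, closure_holds)
```

The check runs over all $6^4$ quadruples, so it confirms the identity and the closure step for this particular pair $(G,H)$ only; the general statement is what the proof establishes.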

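Obviously, if we define $e_C=(e_G,e_G,\cdots)$, where $e_G$ is the identity of $G$, $e_C$ becomes the identity of $C$, since $(x_1,x_2,\cdots)(e_G,e_G,\cdots)=(x_1e_G,x_2e_G,\cdots)=(x_1,x_2,\cdots)$, and similarly for the product in the other order.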

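Finally the inverse. We need to show that $(x_1^{-1},x_2^{-1},\cdots)$ is still an element of $C$. If we have $x_nx_m^{-1} \in H_k$, then $x_mx_n^{-1}=(x_nx_m^{-1})^{-1} \in H_k$ as $H_k$ is a group, and therefore $x_n^{-1}x_m=x_n^{-1}(x_mx_n^{-1})x_n \in H_k$ as $H_k$ is normal. Since $x_n^{-1}x_m=x_n^{-1}(x_m^{-1})^{-1}$, the sequence of inverses is Cauchy.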

The null sequences (of $G$) form a group; moreover, it is a normal subgroup of $C$, the group of Cauchy sequences.

Let $N$ be the set of null sequences of $G$. Still, the identity is defined by $(e_G,e_G,\cdots)$, and there is no need to duplicate the validation. The associativity still follows from $G$. To show that $N$ is closed under termwise product, namely if $(x_n),(y_n) \in N$, then $(x_ny_n)\in N$, one only needs to notice that, given $H_k$, for big $n$ we already have $x_n \in H_k$ and $y_n \in H_k$.

Therefore $x_ny_n \in H_k$ since $x_n$ and $y_n$ are two elements of $H_k$.

To show that $(x_n^{-1})$, which should be treated as the inverse of $(x_n)$, is still in $N$, notice that if $x_n \in H_k$, then $x_n^{-1} \in H_k$.

Next, we shall show that $N$ is a subgroup of $C$, which is equivalent to showing that every null sequence is Cauchy. Given $H_p \supset H_q$ and $(x_n)\in{N}$, for big enough $m$ and $n$ we have $x_n \in H_q$ and $x_m \in H_q$, therefore $x_nx_m^{-1} \in H_q \subset H_p$ as desired.

Finally, pick $(p_n) \in N$ and $(q_n) \in C$; we shall show that $(q_n)(p_n)(q_n)^{-1} \in N$. That is, the sequence $(q_np_nq_n^{-1})$ is a null sequence. Given $H_k$, for big enough $n$ we have $p_n \in H_k$, therefore $q_np_nq_n^{-1} \in H_k$ since $H_k$ is normal. Our statement is proved.

The factor group $C/N$ is called the completion of $G$ (with respect to $(H_n)$).

As we know, the elements of $C/N$ are cosets. A coset can be considered as an element of $G$’s completion. Let’s head back to some properties of factor groups. Pick $x,y \in C$; then $xN=yN$ if and only if $x^{-1}y \in N$. In other words, two Cauchy sequences are equivalent if their ‘difference’ is a null sequence.
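A small computation makes this equivalence tangible. The sketch below assumes the additive group $\mathbb{Z}$ with $H_k=2^k\mathbb{Z}$ (a hypothetical choice for illustration) and compares the sequence $x_n=1+2+\cdots+2^{n-1}$ with the constant sequence $-1$; in additive notation the ‘difference’ is the termwise difference. Only a finite sample of each sequence is checked.

```python
def in_H(x, k):
    # H_k = 2**k * Z inside the additive group Z (assumed for illustration).
    return x % 2**k == 0

xs = [2**n - 1 for n in range(1, 40)]   # x_n = 1 + 2 + ... + 2**(n-1)
ys = [-1] * len(xs)                     # the constant sequence -1
diff = [x - y for x, y in zip(xs, ys)]  # termwise difference, equal to 2**n

def is_null_upto(seq, k, N):
    """Check that every sampled term with index > N lies in H_k."""
    return all(in_H(t, k) for t in seq[N:])

# The difference is null, so (x_n) and (-1, -1, ...) define the same coset.
print(all(is_null_upto(diff, k, k) for k in range(1, 20)))
```

So in this completion the two sequences represent the same element, which one might write as $1+2+4+\cdots=-1$.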

Informally, consider the additive group $\mathbb{Q}$. There are two Cauchy sequences given by

They are equivalent since

is a null sequence. That’s why people say $0.99999… = 1$ (in analysis, the difference converges to $0$; in algebra, we say the two sequences are equivalent). As another example, $\ln{2}$ can be represented by the equivalence class of

We made our completion using Cauchy sequences. The completion is filled with some Cauchy sequence and some additions of ‘nothing’, whence the gap disappears.

Again, the sequence of normal subgroups does not have to be indexed by $\mathbb{N}$. It can be indexed by any directed partially ordered set, or simply partially ordered set. Removing the restriction of index set gives us a great variety of implementation.

However, can we finish everything about completing $\mathbb{Q}$ using this? The answer is no: the multiplication has not been verified! To finish this, field theory has to be taken into consideration.